| (167 intermediate revisions by the same user not shown) |

| Line 15: | Line 15: |

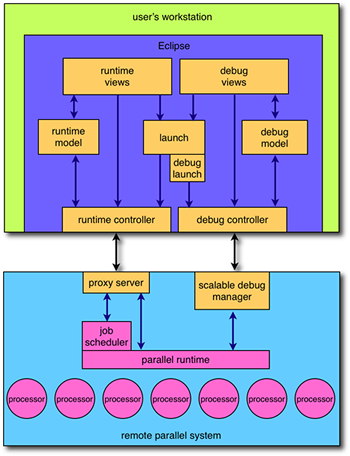

| | The PTP architecture has been designed to address these requirements. The following diagram provides an overview of the overall architecture. | | The PTP architecture has been designed to address these requirements. The following diagram provides an overview of the overall architecture. |

| | | | |

| − | [[Image:ptp20_arch.png]] | + | [[Image:ptp20_arch.png|center]] |

| | | | |

| | The architecture can be roughly divided into three major components: the ''runtime platform'', the ''debug platform'', and ''tool integration services''. These components are defined in more detail in the following sections. | | The architecture can be roughly divided into three major components: the ''runtime platform'', the ''debug platform'', and ''tool integration services''. These components are defined in more detail in the following sections. |

| | | | |

| − | == Runtime Platform == | + | == [[PTP/designs/2.x/runtime_platform | Runtime Platform]] == |

| | | | |

| − | The runtime platform comprises those elements relating to the launching, controlling, and monitoring of parallel applications. The runtime platform consists of the following elements in the architecture diagram:

| + | This [[PTP/designs/2.x/runtime_platform | section]] describes the runtime monitoring and control features of PTP. |

| | | | |

| − | * runtime model

| + | == [[PTP/designs/2.x/launch_platform | Launch Platform]] == |

| − | * runtime views

| + | |

| − | * resource manager

| + | |

| − | * runtime system

| + | |

| − | * remote proxy runtime

| + | |

| − | * proxy runtime

| + | |

| − | * proxy client

| + | |

| − | * proxy server

| + | |

| − | * launch

| + | |

| | | | |

| − | PTP follows a model-view-controller (MVC) design pattern. The heart of the architecture is the ''runtime model'', which provides an abstract representation of the parallel system and the running applications. ''Runtime views'' provide the user with visual feedback of the state of the model, and provide the user interface elements that allow jobs to be launched and controlled. The ''resource manager'' is an abstraction of a resource management system, such as a job scheduler, which is typically responsible for controlling user access to compute resources via queues. The resource manager is responsible for updating the runtime model. The resource manager communicates with the ''runtime system'', which is an abstraction of a parallel runtime system, and is typically responsible for node-to-process allocation, launching processes, and other communication services. The runtime system, in turn, communicates with the ''remote proxy runtime'', which manages proxy communication via some form of remote service. The ''proxy runtime'' is used to map the runtime system interface onto a set of commands and events that are used to communicate with a physically remote system. The proxy runtime makes use of the ''proxy client'' to provide client-side communication services for managing proxy communication. All of these elements are Eclipse plugins. | + | This [[PTP/designs/2.x/launch_platform | section]] describes the services for launching debug and non-debug jobs. |

| | | | |

| − | The ''proxy server'' is a small program that typically runs on the remote system front-end, and is responsible for performing local actions in response to commands sent by the proxy client. The results of the actions are returned to the client in the form of events. The proxy server is usually written in C. The proxy client/server protocol is defined in more detail [[PTP/designs/rm_proxy|here]].

| + | == [[PTP/designs/2.x/debug_platform | Debug Platform]] == |

| | | | |

| − | The final element is ''launch'', which is responsible for managing Eclipse launch configurations, and translating these into the appropriate actions required to initiate the launch of an application on the remote parallel machine.

| + | This [[PTP/designs/2.x/debug_platform | section]] describes the features and operation of the PTP parallel debugger. |

| | | | |

| − | Each of these elements is described in more detail below.

| + | == [[PTP/designs/2.x/remote_services | Remote Services]] == |

| | | | |

| − | === Runtime Model ===

| + | This [[PTP/designs/2.x/remote_services | section]] describes the remote services framework that PTP uses for communication with remote systems. |

| − | | + | |

| − | ==== Overview ====

| + | |

| − | | + | |

| − | The PTP runtime model is a hierarchical attributed parallel system model that represents the state of a remote parallel system at any particular time. The model is ''attributed'' because each model element can contain an arbitrary list of typed key/value pairs that represent attributes of the real object. For example, an element representing a compute node could contain attributes describing the hardware configuration of the node. The structure of the runtime model is shown in the diagram below.

| + | |

| − | | + | |

| − | [[Image:model20.png]]

| + | |

| − | | + | |

| − | Each of the model elements in the hierarchy is a logical representation of a system component. Particular operations and views provided by PTP are associated with each type of model element. Since machine hardware and architectures can vary widely, the model does not attempt to define any particular physical arrangement. It is left to the implementor to decide how the model elements map to the physical machine characteristics.

| + | |

| − | | + | |

| − | ; '''universe''' : This is the top-level model element, and does not correspond to a physical object. It is used as the entry point into the model.

| + | |

| − | | + | |

| − | ; '''resource manager''' : This model element corresponds to an instance of a resource management system on a remote computer system. Since a computer system may provide more than one resource management system, there may be multiple resource managers associated with a particular physical computer system. For example, host A may allow interactive jobs to be run directly using the MPICH2 runtime system, or batched via the LSF job scheduler. In this case, there would be two logical resource managers: an MPICH2 resource manager running on host A, and an LSF resource manager running on host A.

| + | |

| − | | + | |

| − | ; '''machine''' : This model element provides a grouping of computing resources, and will typically be where the resource management system is accessible from, such as the place a user would normally log in. Many configurations provide such a front-end machine.

| + | |

| − | | + | |

| − | ; '''queue''' : This model element represents a logical queue of jobs waiting to be executed on a machine. There will typically be a one-to-one mapping between a queue model element and a resource management system queue. Systems that don't have the notion of queues should map all jobs on to a single ''default'' queue.

| + | |

| − | | + | |

| − | ; '''node''' : This model element represents some form of computational resource that is responsible for executing an application program, but where it is not necessary to provide any finer level of granularity. For example, a cluster node with multiple processors would normally be represented as a node element. An SMP machine would represent physical processors as a node element.

| + | |

| − | | + | |

| − | ; '''job''' : This model element represents an instance of an application that is to be executed.

| + | |

| − | | + | |

| − | ; '''process''' : This model element represents an execution unit using some computational resource. There is an implicit one-to-many relationship between a node and a process. For example, a process would be used to represent a Unix process. Finer granularity (i.e. threads of execution) is managed by the debug model.

| + | |

| − | | + | |

| − | ==== Attributes ====

| + | |

| − | | + | |

| − | Each element in the model can contain an arbitrary number of attributes. An attribute is used to provide additional information about the model element. An attribute consists of a ''key'' and a ''value''. The key is a unique ID that refers to an ''attribute definition'', which can be thought of as the type of the attribute. The value is the value of the attribute.

| + | |

| − | | + | |

| − | An attribute definition contains meta-data about the attribute, and is primarily used for data validation and displaying attributes in the user interface. This meta-data includes:

| + | |

| − | | + | |

| − | ; ID : The attribute definition ID.

| + | |

| − | | + | |

| − | ; type : The type of the attribute. Currently supported types are ARRAY, BOOLEAN, DATE, DOUBLE, ENUMERATED, INTEGER, and STRING.

| + | |

| − | | + | |

| − | ; name : The short name of the attribute. This is the name that is displayed in UI property views.

| + | |

| − | | + | |

| − | ; description : A description of the attribute that is displayed when more information about the attribute is requested (e.g. tooltip popups.)

| + | |

| − | | + | |

| − | ; default : The default value of the attribute. This is the value assigned to the attribute when it is first created.

| + | |

| − | | + | |

| − | ==== Pre-defined Attributes ====

| + | |

| − | | + | |

| − | All model elements have at least two mandatory attributes. These attributes are:

| + | |

| − | | + | |

| − | ; '''id''' : This is a unique ID for the model element.

| + | |

| − | | + | |

| − | ; '''name''' : This is a distinguishing name for the model element. It is primarily used for display purposes and does not need to be unique.

| + | |

| − | | + | |

| − | In addition, model elements have different sets of optional attributes. These attributes are shown in the following table:

| + | |

| − | | + | |

| − | {| border="1"

| + | |

| − | ! element !! attribute !! type !! description

| + | |

| − | |-

| + | |

| − | | rowspan="4" | resource manager

| + | |

| − | | rmState || enum || STARTING, STARTED, STOPPING, STOPPED, SUSPENDED, ERROR

| + | |

| − | |-

| + | |

| − | | rmDescription || string || Text describing this resource manager

| + | |

| − | |-

| + | |

| − | | rmType || string || Resource manager class

| + | |

| − | |-

| + | |

| − | | rmID || string || Unique identifier for this resource manager. Used for persistence

| + | |

| − | |-

| + | |

| − | | rowspan="2" | machine

| + | |

| − | | machineState || enum || UP, DOWN, ALERT, ERROR, UNKNOWN

| + | |

| − | |-

| + | |

| − | | numNodes || int || Number of nodes known by this machine

| + | |

| − | |-

| + | |

| − | | queue || queueState || enum || NORMAL, COLLECTING, DRAINING, STOPPED

| + | |

| − | |-

| + | |

| − | | rowspan="3" | node

| + | |

| − | | nodeState || enum || UP, DOWN, ERROR, UNKNOWN

| + | |

| − | |-

| + | |

| − | | nodeExtraState || enum || USER_ALLOC_EXCL, USER_ALLOC_SHARED, OTHER_ALLOC_EXCL, OTHER_ALLOC_SHARED, RUNNING_PROCESS, EXITED_PROCESS, NONE

| + | |

| − | |-

| + | |

| − | | nodeNumber || int || Zero-based index of the node

| + | |

| − | |}

| + | |

| − | | + | |

| − | ==== Implementation ====

| + | |

| − | | + | |

| − | === Runtime Views ===

| + | |

| − | | + | |

| − | The runtime views serve two functions: they provide a means of observing the state of the runtime model, and they allow the user to interact with the parallel environment. Access to the views is managed using an Eclipse ''perspective''. A perspective is a means of grouping views together so that they can provide a cohesive user interface. The perspective used by the runtime platform is called the '''PTP Runtime''' perspective.

| + | |

| − | | + | |

| − | There are currently four main views provided by the runtime platform:

| + | |

| − | | + | |

| − | ; '''resource manager view''' : This view is used to manage the lifecycle of resource managers. Each resource manager model element is displayed in this view. A user can add, delete, start, stop, and edit resource managers using this view. A different color icon is used to display the different states of a resource manager.

| + | |

| − | | + | |

| − | === Resource Manager ===

| + | |

| − | | + | |

| − | === Runtime System ===

| + | |

| − | | + | |

| − | === Remote Proxy Runtime ===

| + | |

| − | | + | |

| − | === Proxy Runtime ===

| + | |

| − | | + | |

| − | === Proxy Client ===

| + | |

| − | | + | |

| − | === Proxy Server ===

| + | |

| − | | + | |

| − | === Launch ===

| + | |

| − | | + | |

| − | == Debug Platform ==

| + | |

| − | | + | |

| − | The debug platform comprises those elements relating to the debugging of parallel applications. The debug platform consists of the following elements in the architecture diagram:

| + | |

| − | | + | |

| − | * debug model

| + | |

| − | * debug views

| + | |

| − | * PDI

| + | |

| − | * SDM proxy

| + | |

| − | * proxy debug

| + | |

| − | * proxy client

| + | |

| − | * scalable debug manager

| + | |

| − | | + | |

| − | The ''debug model'' provides a representation of a job and its associated processes being debugged. The ''debug views'' allow the user to interact with the debug model, and control the operation of the debugger. The ''parallel debug interface (PDI)'' is an abstract interface that defines how to interact with the job being debugged. Implementations of this interface provide the concrete classes necessary to interact with a real application. The ''SDM proxy'' provides an implementation of PDI that communicates via a proxy running on a remote machine. The ''proxy debug'' layer implements a set of commands and events that are used for this communication, and that use the ''proxy client'' communication services (the same proxy client used by the runtime platform). All of these elements are implemented as Eclipse plugins.

| + | |

| − | | + | |

| − | The ''scalable debug manager'' is an external program that runs on the remote system. It manages the debugger communication between the proxy client and low-level debug engines that control the debug operations on the application processes.

| + | |

| − | | + | |

| − | Each of these elements is described in more detail below.

| + | |

| − | | + | |

| − | === Debug Model ===

| + | |

| − | | + | |

| − | === Debug User Interface ===

| + | |

| − | | + | |

| − | === Debug Controller ===

| + | |

| − | | + | |

| − | === Debug API ===

| + | |

| − | | + | |

| − | === Scalable Debug Manager ===

| + | |

| − | | + | |

| − | == Tool Integration Services ==

| + | |
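The attributed runtime model described in the removed revision text above (typed attribute definitions attached to a hierarchy of model elements) can be illustrated with a small sketch. This is not PTP code: PTP itself is implemented as Eclipse plugins, and the class names `AttributeDefinition` and `ModelElement`, the `allowed` field, and the nesting of a machine under a resource manager are all assumptions made for illustration. Only the attribute types, the meta-data fields (ID, type, name, description, default), and the `nodeState` and `nodeNumber` attributes are taken from the text.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Dict, List


class AttributeType(Enum):
    # Attribute types listed in the attribute definition description.
    ARRAY = "array"
    BOOLEAN = "boolean"
    DATE = "date"
    DOUBLE = "double"
    ENUMERATED = "enumerated"
    INTEGER = "integer"
    STRING = "string"


@dataclass(frozen=True)
class AttributeDefinition:
    """Meta-data for an attribute: ID, type, name, description, default."""
    id: str
    type: AttributeType
    name: str
    description: str
    default: Any
    allowed: tuple = ()  # hypothetical: legal values for ENUMERATED attributes

    def validate(self, value: Any) -> Any:
        # Only two checks are shown; a fuller version would cover every type.
        if self.type is AttributeType.ENUMERATED and value not in self.allowed:
            raise ValueError(f"{value!r} is not a legal value for {self.id}")
        if self.type is AttributeType.INTEGER and (
            isinstance(value, bool) or not isinstance(value, int)
        ):
            raise ValueError(f"{self.id} expects an integer, got {value!r}")
        return value


@dataclass
class ModelElement:
    """A node in the hierarchy; 'id' and 'name' are the mandatory attributes."""
    kind: str  # e.g. "universe", "machine", "node"
    id: str
    name: str
    attributes: Dict[str, Any] = field(default_factory=dict)
    children: List["ModelElement"] = field(default_factory=list)

    def set_attribute(self, definition: AttributeDefinition, value: Any = None) -> None:
        # The key is the definition ID; a missing value falls back to the default.
        chosen = definition.default if value is None else value
        self.attributes[definition.id] = definition.validate(chosen)

    def add_child(self, child: "ModelElement") -> "ModelElement":
        self.children.append(child)
        return child


NODE_STATE = AttributeDefinition(
    "nodeState", AttributeType.ENUMERATED, "State", "State of the node",
    "UNKNOWN", ("UP", "DOWN", "ERROR", "UNKNOWN"),
)
NODE_NUMBER = AttributeDefinition(
    "nodeNumber", AttributeType.INTEGER, "Number", "Zero-based index of the node", 0,
)

# Assumed nesting for illustration: universe > resource manager > machine > node.
universe = ModelElement("universe", "0", "universe")
rm = universe.add_child(ModelElement("resource manager", "1", "MPICH2 on host A"))
machine = rm.add_child(ModelElement("machine", "2", "host A"))
node = machine.add_child(ModelElement("node", "3", "node0"))
node.set_attribute(NODE_STATE, "UP")
node.set_attribute(NODE_NUMBER)  # falls back to the definition's default
print(node.attributes)
```

Keeping validation on the definition rather than on the element mirrors the statement that attribute definitions are used primarily for data validation and for displaying attributes in the user interface; the elements themselves store only key/value pairs.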

The Parallel Tools Platform (PTP) is a portable, scalable, standards-based integrated development environment specifically suited to developing applications for parallel computer architectures. PTP combines existing functionality in the Eclipse Platform and the C/C++ Development Tools with new services specifically designed to interface with parallel computing systems, enabling the development of parallel programs suitable for a range of scientific, engineering, and commercial applications.

This document provides a detailed design description of the major elements of the Parallel Tools Platform version 2.x.

The Parallel Tools Platform provides an Eclipse-based environment for supporting the integration of development tools that interact with parallel computer systems. PTP provides pre-installed tools for launching, controlling, monitoring, and debugging parallel applications. A number of services and extension points are also provided to enable other tools to be integrated with Eclipse and fully utilize the PTP functionality.

Launching a parallel program is a complicated process compared with launching a program on a traditional computer system. Although there is some standardization in how parallel codes are written (MPI, for example), there is little standardization in how to launch, control, and interact with a parallel program. To further complicate matters, many parallel systems employ some form of resource allocation system, such as a job scheduler, and in many cases execution of the parallel program must be managed by the resource allocation system rather than invoked directly by the user.

In most parallel computing environments, the parallel computer system is remote from the user's location. This necessitates that the parallel runtime environment be able to communicate with the parallel computer system remotely.

The PTP architecture has been designed to address these requirements. The following diagram provides an overview of the overall architecture.