
Linux Tools Project/TMF/CTF guide

This article is not finished. Please do not modify it until this label is removed by its original author. Thank you.


This article is a guide about using the CTF component of Linux Tools (org.eclipse.linuxtools.ctf.core). It targets both users (of the existing code) and developers (intending to extend the existing code).

You might want to jump directly to examples if you prefer learning this way. Section CTF general information briefly explains CTF while section org.eclipse.linuxtools.ctf.core is a reference of the Linux Tools' CTF component classes and interfaces.


CTF general information

This section discusses the CTF format.

What is CTF?

CTF (Common Trace Format) is a generic trace binary format defined and standardized by EfficiOS. Although EfficiOS is the company maintaining LTTng (LTTng is using CTF as its sole output format), CTF was designed as a general purpose format to accommodate basically any tracer (be it software/hardware, embedded/server, etc.).

CTF was designed to be very efficient to produce, albeit rather difficult to decode, mostly due to the metadata parsing stage and dynamic scoping support.

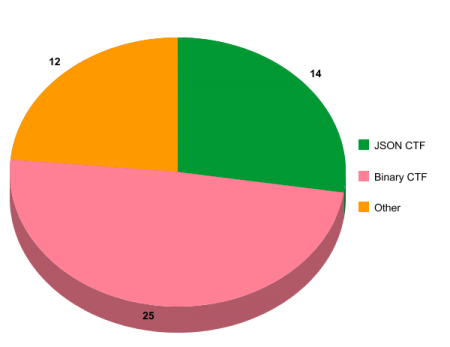

We distinguish two flavours of CTF in the next sections: binary CTF refers to the official binary representation of a CTF trace and JSON CTF is a plain text equivalent of the same data.

Binary CTF anatomy

This article does not cover the full specification of binary CTF; the official specification already does this.

Basically, the purpose of a trace is to record events. A binary CTF trace, like any other trace, is thus a collection of event data.

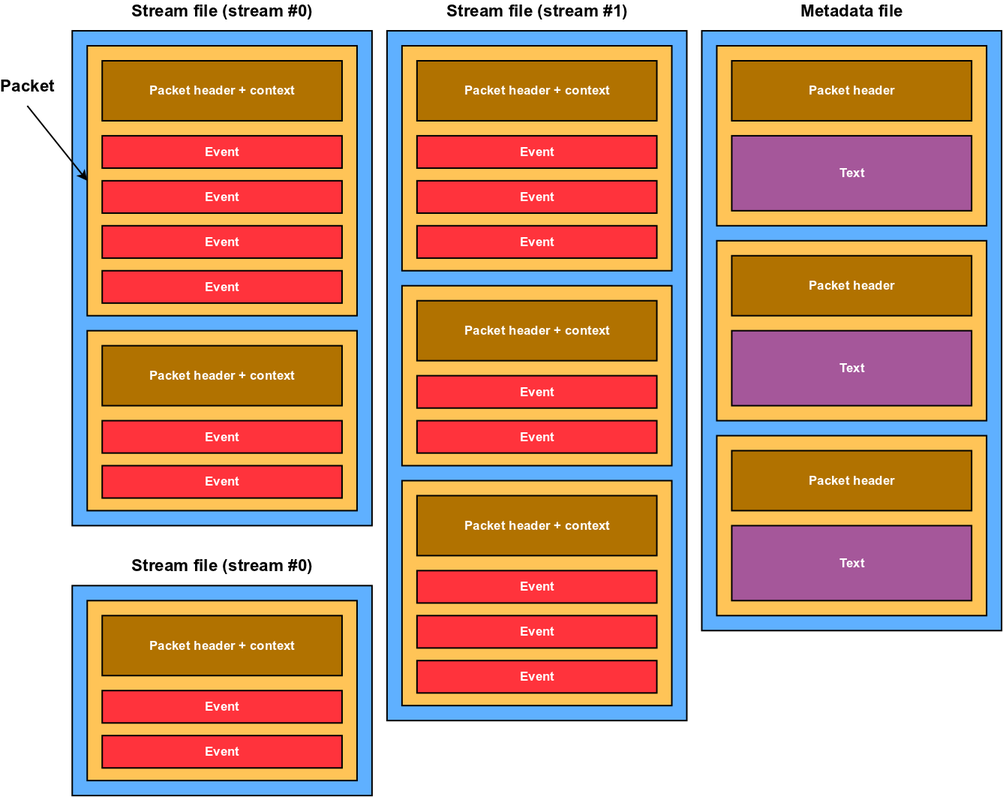

Here is a binary CTF trace:

Stream files

A trace is divided into streams, which may span over multiple stream files. A trace also includes a metadata file which is covered later.

There can be any number of streams, as long as they have different IDs. However, in most cases (at least at the time of writing this article), there is only one stream, which is divided into one file per CPU. Since different CPUs can generate different events at the same time, LTTng (the only tracer known to produce a CTF output) splits its only stream into multiple files. Please note: a single file cannot contain multiple streams.

In the image above, we see 3 stream files: 2 for stream with ID 0 and a single one for stream with ID 1.

A stream "contains" packets. This relation can be seen the other way around: packets contain a stream ID. A stream file contains nothing else than packets (no bytes before, between or after packets).

Packets

A packet is the main container of events. Events data cannot reside outside packets. Sometimes a packet may contain only one event, but it's still inside a packet.

Every packet starts with a small packet header, which contains fields such as its stream ID (which should always be the same for all packets within the same file) and often a magic number. Immediately following is an optional packet context. This one usually holds more information, such as the packet size and content size in bits, the time interval covered by its events and so on.

Then: events, one after the other. How do we know when we reach the end of the packet? We keep track of the current offset into the packet and stop when it equals the content size defined in the packet context.
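
The end-of-packet rule above can be sketched as a small cursor that counts bits. This is only an illustration: the class name PacketCursor and the fixed event size used by countEvents() are assumptions made for the example, not part of org.eclipse.linuxtools.ctf.core.

```java
/** Hypothetical helper: tracks the read position inside one packet, in bits. */
final class PacketCursor {
    private final long contentSizeBits;
    private long offsetBits;

    /**
     * @param headerAndContextBits bits already consumed by the packet header and context
     * @param contentSizeBits      content size found in the packet context
     */
    PacketCursor(long headerAndContextBits, long contentSizeBits) {
        this.offsetBits = headerAndContextBits;
        this.contentSizeBits = contentSizeBits;
    }

    /** True while the current offset has not reached the content size. */
    boolean hasMoreEvents() {
        return offsetBits < contentSizeBits;
    }

    /** Moves the offset past one decoded event. */
    void advance(long eventSizeBits) {
        offsetBits += eventSizeBits;
    }

    /** Toy driver: counts events of a fixed size (real events have varying sizes). */
    static int countEvents(long headerAndContextBits, long contentSizeBits, long eventSizeBits) {
        PacketCursor cursor = new PacketCursor(headerAndContextBits, contentSizeBits);
        int count = 0;
        while (cursor.hasMoreEvents()) {
            cursor.advance(eventSizeBits);
            count++;
        }
        return count;
    }
}
```

In a real reader, the amount passed to advance() would be whatever the decoding of the event header, contexts and payload actually consumed.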

Events

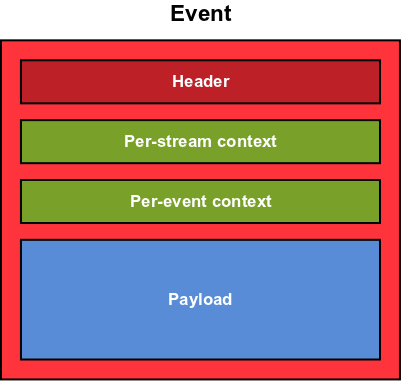

An event isn't just a bunch of payload bits. We have to know what type of event it is, and sometimes other things. Here's the structure of an event:

The event header contains the time stamp of the event and its ID. Knowing its ID, we know the payload structure.

Both contexts are optional. The per-stream context exists in all events of a stream if enabled. The per-event context exists in all events with a given ID (within a certain stream) if enabled. Thus, the per-stream context is enabled per stream and the per-event context is enabled per (stream, event type) pair.

Please note: there is no stream ID written anywhere in an event. This means that an event "outside" its packet is lost forever since we cannot know anything about it. This is not the case for a packet: since it has a stream ID field in its header (and the packet header structure is common to all packets of all streams of a trace), a packet is independent and could be cut and pasted elsewhere without losing its identity.

Metadata file

The metadata file (which, according to the official CTF specification, must be named exactly metadata if stored on a filesystem) describes the whole trace structure using TSDL (Trace Stream Description Language). This means the CTF format is self-described.

When "packetized" (like in the CTF structure image above), a metadata packet contains an absolutely defined metadata packet header (defined in the official specification) and no context. The metadata packet does not contain events: all its payload is a single text string. When concatenating the payloads of all the packets, we get the final metadata text.

In its simpler version, the metadata file can be a plain text file containing only the metadata text. This file is still named metadata. It is valid and recognized by CTF readers. The way to differentiate the packetized from the plain text versions is that the former starts with a magic number which has "non text bytes" (control characters). In fact, it is the magic number field of the first packet's header. All the metadata packets have this required magic number.
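
As a rough sketch of this test, the first four bytes of the file can be compared with the metadata packet magic number in both byte orders. The value 0x75D11D57 is the one given by the CTF 1.8 specification and should be checked against it. The class name MetadataSniffer is hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical helper: tells packetized metadata apart from plain text metadata. */
final class MetadataSniffer {
    /** Metadata packet magic number, as given by the CTF 1.8 specification. */
    static final int METADATA_MAGIC = 0x75D11D57;

    /** Returns true if the given leading bytes start with the magic number. */
    static boolean isPacketized(byte[] firstBytes) {
        if (firstBytes.length < 4) {
            return false;
        }
        // The byte order is not known yet at this point, so try both.
        int bigEndian = ByteBuffer.wrap(firstBytes, 0, 4).order(ByteOrder.BIG_ENDIAN).getInt();
        int littleEndian = ByteBuffer.wrap(firstBytes, 0, 4).order(ByteOrder.LITTLE_ENDIAN).getInt();
        return bigEndian == METADATA_MAGIC || littleEndian == METADATA_MAGIC;
    }
}
```

A plain text metadata file starts with printable characters, so neither interpretation of its first four bytes matches the magic number.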

CTF types

The CTF types are data types that may be specified in the metadata and written as binary data into various places of stream files. In fact, anything written in the stream files is described in the metadata and thus is a CTF type.

Valid types are the following:

- simple types

  - integer number (any length)

  - floating point number (any lengths for mantissa and exponent parts)

  - string (many character sets available)

  - enumeration (mapping of string labels to ranges of integer numbers)

- compound types

  - structure (collection of key/value entries, where the key is always a string)

  - array (length fixed in the metadata)

  - sequence (dynamic length using a linked integer)

  - variant (placeholder for some other possible types according to the dynamic value of a linked enumeration)

JSON CTF

As a means to keep test traces in a portable and versionable format, a specific JSON schema was developed in summer 2012. Its purpose is to make the following round trip possible: a binary CTF trace A is translated to JSON CTF, which is then translated back to a binary CTF trace B, with binary CTF traces A and B being binary identical (except for padding bits and the metadata file).

About JSON

JSON is a lightweight text format used to define complex objects, with only a few data types that are common to all file formats: objects (aka maps, hashes, dictionaries, property lists, key/value pairs) with ordered keys, arrays (aka lists), Unicode strings, integer and floating point numbers (no limitation on precision), booleans and null.

Here's a short example of a JSON object showing all the language features:

    {
        "firstName": "John",
        "lastName": "Smith",
        "age": 25,
        "male": true,
        "carType": null,
        "kids": [
            "Moby Dick",
            "Mireille Tremblay",
            "John Smith II"
        ],
        "infos": {
            "address": {
                "streetAddress": "21 2nd Street",
                "city": "New York",
                "state": "NY",
                "postalCode": "10021"
            },
            "phoneNumber": [
                { "type": "home", "number": "212 555-1234" },
                { "type": "fax", "number": "646 555-4567" }
            ]
        },
        "balance": 3482.15,
        "lovesXML": false
    }

A JSON object always starts with {. The root object is unnamed. All keys are strings (must be quoted). Numbers may be negative. You may basically have any structure: array of arrays of objects containing objects and arrays.

Even big JSON files are easy to read, but a tree view can always be used for even more clarity.

Why not use XML, then? From the official JSON website:

- Simplicity: JSON is way simpler than XML and is easier to read for humans, too. Also: JSON has no "attributes" belonging to nodes.

- Extensibility: JSON is not extensible because it does not need to be. JSON is not a document markup language, so it is not necessary to define new tags or attributes to represent data in it.

- Interoperability: JSON has the same interoperability potential as XML.

- Openness: JSON is at least as open as XML, perhaps more so because it is not in the center of corporate/political standardization struggles.

In other words: why bother closing a tag that was already opened with the same name in XML when the name can be written only once in JSON? Compare both:

    <cart user="john">
        <item>asparagus</item>
        <item>pork</item>
        <item>bananas</item>
    </cart>

    {
        "user": "john",
        "items": [ "asparagus", "pork", "bananas" ]
    }

Schema

The "dictionary" approach of JSON objects makes it very convenient to store CTF structures since they are exactly that: ordered key/value pairs where the key is always a string. Arrays and sequences can be represented by JSON arrays (the dynamic length of CTF sequences is not needed in JSON since the closing ] indicates the end of the array, whereas CTF arrays/sequences must know the number of elements before starting to read). CTF integers and enumerations are represented as JSON integer numbers; CTF floating point numbers are represented as a JSON object containing two integer numbers for the mantissa and exponent parts (to keep the precision that would be lost by using JSON floating point numbers).

To fully understand the developed JSON schema, we use the same subsection names as in Binary CTF anatomy.

Stream files

All streams of a JSON CTF trace fit into the same file, which by convention has a .json extension. So: a binary CTF trace is a directory whereas a JSON CTF trace is a single file. There is no such thing as a "stream file" in JSON CTF. The JSON file looks like this:

{ "metadata": "we will see this later", "packets": [ "next subsection" ] }

The packets node is an array of packet nodes which are ordered by first time stamp (this is found in their context).

Binary CTF stream files can still be rebuilt from a JSON CTF trace since a packet header contains its stream ID. It's just a matter of reading the packet objects in order, converting them to binary CTF, and appending the data to the appropriate files according to the stream ID and the CPU ID.
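
The routing step can be sketched as follows. Only the grouping by (stream ID, CPU ID) is shown; the conversion to binary CTF is left out. The class names and the output file naming scheme are assumptions made for this example, not what the library does.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch: routes JSON CTF packets to the stream files they belong to. */
final class PacketDemux {
    /** Minimal stand-in for a packet node: only the two values used for routing. */
    static final class PacketRef {
        final long streamId; // from the packet header
        final long cpuId;    // from the packet context

        PacketRef(long streamId, long cpuId) {
            this.streamId = streamId;
            this.cpuId = cpuId;
        }
    }

    /** Assumed naming scheme for the rebuilt stream files. */
    static String fileNameFor(PacketRef packet) {
        return "stream" + packet.streamId + "_cpu" + packet.cpuId;
    }

    /** Groups packets per target file, keeping the order in which they were read. */
    static Map<String, List<PacketRef>> group(List<PacketRef> packetsInOrder) {
        Map<String, List<PacketRef>> files = new LinkedHashMap<>();
        for (PacketRef packet : packetsInOrder) {
            files.computeIfAbsent(fileNameFor(packet), key -> new ArrayList<>()).add(packet);
        }
        return files;
    }
}
```

Because the packets array is ordered by first time stamp, appending in reading order keeps each rebuilt file chronologically sorted.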

Packets

Packet nodes (which are elements of the aforementioned packets JSON array) look like this:

{ "header": { }, "context": { }, "events": [ "event nodes here" ] }

Of course, the header and context fields contain the appropriate structures.

The events node is an array of event nodes.

Here is a real world example:

    {
        "header": {
            "magic": 3254525889,
            "uuid": [30, 20, 218, 86, 124, 245, 157, 64, 183, 255, 186, 197, 61, 123, 11, 37],
            "stream_id": 0
        },
        "context": {
            "timestamp_begin": 1735904034660715,
            "timestamp_end": 1735915544006801,
            "events_discarded": 0,
            "content_size": 2096936,
            "packet_size": 2097152,
            "cpu_id": 0
        },
        "events": [ ]
    }

In this last example, event nodes are omitted to save space. The key names inside nodes header and context are exactly the ones declared in the metadata text.

The context node is optional and may be absent if there's no packet context.
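
The values of the real world packet above can be checked with a few lines of Java. The sketch below converts the uuid array to a java.util.UUID, prints the magic number in hexadecimal and computes the padding as the difference between packet size and content size (both in bits). The class and method names are made up for the example.

```java
import java.nio.ByteBuffer;
import java.util.UUID;

/** Hypothetical helper working on the values of a JSON CTF packet node. */
final class PacketExampleFacts {
    /** Builds a UUID from the 16 unsigned byte values of the JSON "uuid" array. */
    static UUID uuidFromJsonArray(int[] values) {
        if (values.length != 16) {
            throw new IllegalArgumentException("a UUID has exactly 16 bytes");
        }
        ByteBuffer buffer = ByteBuffer.allocate(16); // big-endian by default
        for (int value : values) {
            buffer.put((byte) value);
        }
        buffer.flip();
        return new UUID(buffer.getLong(), buffer.getLong());
    }

    /** Padding at the end of the packet, in bits. */
    static long paddingBits(long packetSizeBits, long contentSizeBits) {
        return packetSizeBits - contentSizeBits;
    }

    /** Hexadecimal form of the magic number, which is easier to recognize. */
    static String magicAsHex(long magic) {
        return Long.toHexString(magic);
    }
}
```

With the example values, the magic number reads c1fc1fc1, the packet size is 2097152 bits (256 KiB) and the padding is 2097152 - 2096936 = 216 bits.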

Events

Event nodes (which are elements of the aforementioned events JSON array) have a structure that's easy to guess: header, optional per-stream context, optional per-event context and payload. Nodes are named like this:

{ "header": { }, "streamContext": { }, "eventContext": { }, "payload": { } }

Here is a real world example:

    {
        "header": {
            "id": 65535,
            "v": {
                "id": 34,
                "timestamp": 1735914866016283
            }
        },
        "payload": {
            "_comm": "lttng-consumerd",
            "_tid": 31536,
            "_delay": 0
        }
    }

No context is used in this particular example. Again, the key names inside nodes header, streamContext, eventContext and payload are exactly the ones declared in the metadata text.

Metadata file

Back to the JSON CTF root node. It contains two keys: metadata and packets. We already covered packets in section Packets. The metadata node is a single JSON string. It's either external:somefile.tsdl, in which case file somefile.tsdl must exist in the same directory and contain the whole metadata plain text, or the whole metadata text in a single string. The latter means all new lines and tabs must be escaped with \n and \t, for example.

Since the metadata text of a given trace may be huge (often several hundreds of kilobytes), it might be a good idea to make it external for human readability. However, if portability is the primary concern, having a single JSON text file is still possible using this technique.

There is never going to be a collision between the string external: and the beginning of a real TSDL metadata text since external is not a TSDL keyword.
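
A minimal sketch of how the metadata node could be resolved, assuming the external file is UTF-8 encoded and located next to the JSON file. The class MetadataNode and its methods are hypothetical; the library's own handling may differ.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

/** Hypothetical helper: turns the JSON "metadata" string into the metadata text. */
final class MetadataNode {
    static final String EXTERNAL_PREFIX = "external:";

    /** True if the node refers to an external file instead of holding the text. */
    static boolean isExternal(String node) {
        return node.startsWith(EXTERNAL_PREFIX);
    }

    /** File name following the prefix (only meaningful for external nodes). */
    static String externalFileName(String node) {
        return node.substring(EXTERNAL_PREFIX.length());
    }

    /** Returns the metadata text, reading the external file when needed. */
    static String resolve(String node, Path jsonTraceFile) {
        if (!isExternal(node)) {
            return node; // the node already holds the whole metadata text
        }
        // The external file must sit in the same directory as the JSON file.
        Path directory = jsonTraceFile.toAbsolutePath().getParent();
        try {
            byte[] bytes = Files.readAllBytes(directory.resolve(externalFileName(node)));
            return new String(bytes, StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```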

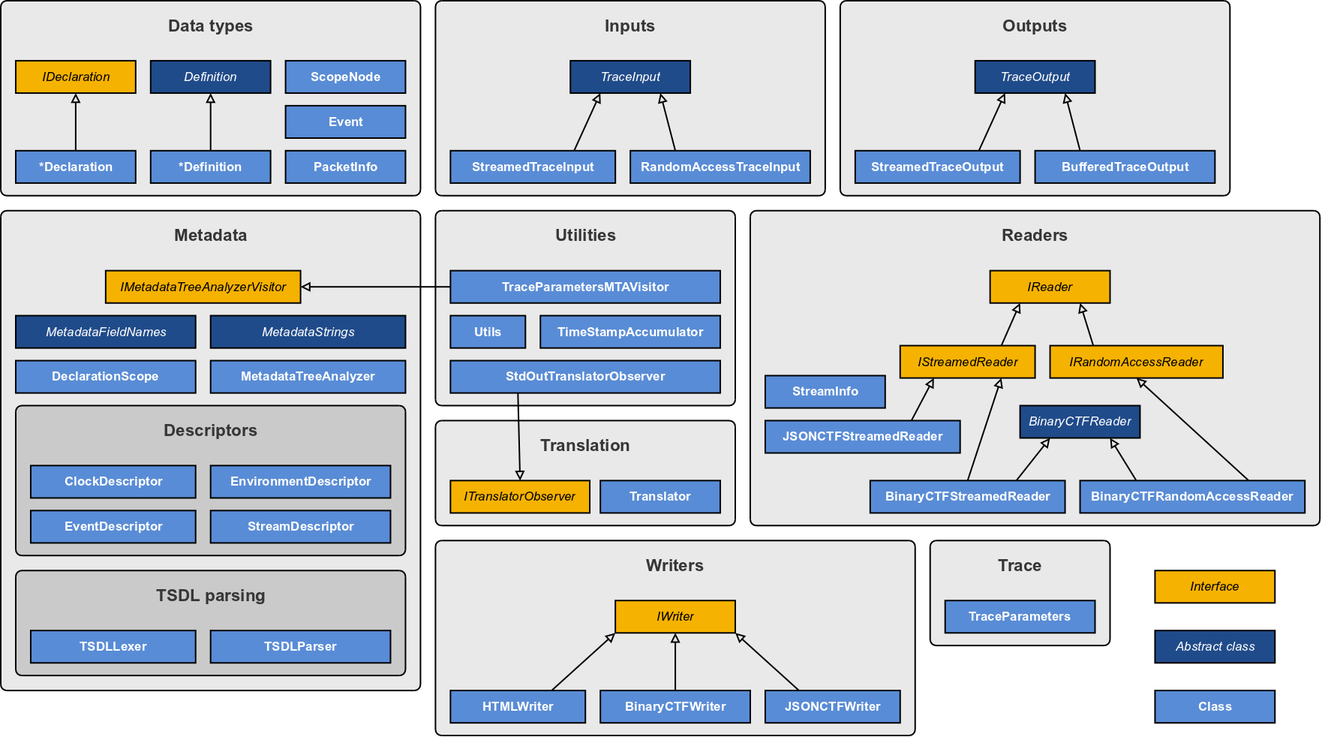

org.eclipse.linuxtools.ctf.core

This section describes the Java software architecture of org.eclipse.linuxtools.ctf.core. This package and all its subpackages contain code to read/write both binary CTF and JSON CTF formats, translate from one to another and support other input/output languages.

Please note up-to-date JavaDoc is also available for all classes, interfaces and enumerations here.

Architecture outline

Java classes are packaged in a way that makes it easier to discern the different, relatively independent parts. The following general class diagram shows the categories and their content:

Strictly speaking, the "TSDL parsing" subcategory of "Metadata" is not part of org.eclipse.linuxtools.ctf.core: it is located in org.eclipse.linuxtools.ctf.parser. However, its two classes (the TSDL lexer and the TSDL parser) are automatically generated by ANTLR and are not part of this article's content.

The following sections explain each category seen in the above diagram. The order in which they come is important.

Security

Since performance is the primary concern in this CTF decoding context, copying is avoided as much as possible and security was not taken into account during the implementation phase. This means developers must carefully follow the guidelines of this article in order to operate the whole mechanism correctly without breaking anything. For example, one is able to clear the payload structure of an event object obtained while reading a trace, but this will cause exceptions when reading the next event of the same type, since those objects are reused and only valid between seeking calls. This is all explained in the following sections, but the rule of thumb is: do not assume what you get is a copy.

Deep copy constructors nevertheless exist for all data types: all simple/compound definitions, events and packet information. It is the user's responsibility to create deep copies when needed, at the expense of performance and memory usage.

Data types

Data types refer to the actual useful data contained in traces. It is what the user gets when reading a trace and what the user must provide when writing a trace.

CTF types

Specific types are omitted from the general class diagram since there are too many. The CTF type classes are analogous to the CTF types already described. All types have a declaration class and a definition class. The declaration contains declarative information: information that doesn't change from one value of the type to the next. A definition contains the actual value, whatever it is. For example, an integer declaration contains its length in bits (found in the metadata text), while an integer definition contains its integer value. This way, multiple definitions may refer to the same declaration.

All definitions hold a reference to their declaration. The primary purpose of a declaration is to create an empty definition. An integer declaration creates an empty integer definition, a structure declaration creates an empty structure definition, and so on. Once created, the definitions are ready to read, except for a few dynamic scoping details that are covered later in this article. This creational pattern is captured by an interface that all type declaration classes implement. Specific declarations contain specific declarative information.
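
The declaration/definition split can be illustrated with a reduced model. All names below (SketchDeclaration, SketchIntegerDeclaration and so on) are invented for the example and are much simpler than the real classes; only the idea of a declaration acting as a factory of empty definitions is taken from the text.

```java
/** Hypothetical stand-in for the declaration interface: a factory of empty definitions. */
interface SketchDeclaration {
    SketchDefinition createDefinition();
}

/** Hypothetical stand-in for the common definition base class. */
abstract class SketchDefinition {
    private final SketchDeclaration declaration;

    SketchDefinition(SketchDeclaration declaration) {
        this.declaration = declaration;
    }

    /** The shared, read-only declarative information. */
    SketchDeclaration getDeclaration() {
        return declaration;
    }
}

/** Declarative information of an integer: does not change from one value to the next. */
final class SketchIntegerDeclaration implements SketchDeclaration {
    final int lengthBits;
    final boolean signed;

    SketchIntegerDeclaration(int lengthBits, boolean signed) {
        this.lengthBits = lengthBits;
        this.signed = signed;
    }

    @Override
    public SketchIntegerDefinition createDefinition() {
        return new SketchIntegerDefinition(this);
    }
}

/** Holds one actual integer value; many definitions may share one declaration. */
final class SketchIntegerDefinition extends SketchDefinition {
    long value; // empty (zero) until a reader fills it

    SketchIntegerDefinition(SketchIntegerDeclaration declaration) {
        super(declaration);
    }
}
```

Creating two definitions from the same declaration yields two independent values that both point back to the same declarative information.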

The types are:

- simple types

  - integer: a declaration class and its definition class (IntegerDefinition)

  - floating point number: a declaration class and its definition class (FloatDefinition)

  - string: a declaration class and its definition class (StringDefinition)

  - enumeration: a declaration class and its definition class (EnumDefinition)

- compound types

  - structure: StructDeclaration and StructDefinition

  - array: a declaration class and its definition class (ArrayDefinition)

  - sequence: a declaration class and its definition class (SequenceDefinition)

  - variant: a declaration class and its definition class (VariantDefinition)

If you only intend to read traces or translate them, you will never have to create definitions yourself: the reader you use takes care of that. Creating definitions from scratch (bypassing the declarations) is a little painful and only useful when creating a synthetic trace from scratch. However, the preferred approach for this is to generate JSON CTF text using Java or a scripting language such as Python and then translate it to binary CTF using the facilities of org.eclipse.linuxtools.ctf.core.

All definitions inherit from a common abstract class. This forces them to implement, amongst other methods, read(String, IReader) and write(String, IWriter). This is the entry point of genericity regarding input/output trace formats. Simple types only call the appropriate IReader or IWriter method, passing a reference to this. Compound types, however, may iterate over their items and recursively call their read/write methods.

Definitions must also implement copyOf(), which returns a definition of that same abstract type. This method creates a deep copy of this and is used by the copy constructor of each definition to achieve a proper deep copy without casting.

Scope nodes

Sequences are arrays of a specific CTF type with a dynamic length. The length is the value of "some integer somewhere". Basically, this integer value can be anywhere in the data already read, and its exact location is described in the metadata text. Without knowing the length prior to reading the sequence, we cannot know when to stop reading items since there's no "end of sequence" mark in binary CTF. In JSON CTF, it's another story: all arrays end with character ], so the dynamic length value is not really needed in this case. Here are a few places where the length could be:

- any already read integer of the current event payload

- any integer in the header, per-stream context or per-event context of the current event

- any integer in the header or the context of the current packet

Variants also need a dynamic value: they need the dynamic current label of a specific enumeration, which may be located in any of the aforementioned places. Using this label, the right CTF type can be selected amongst possible ones (since variants are just placeholders).

Knowing this, we see that sequences and variants need to get the current value of some definition before starting a read operation. This definition must be linked, and this binding is usually done at the time of opening a trace using scope nodes.

Scope nodes form the basis of a scope tree. A scope tree is a simple tree in which some nodes are linked to definitions. The definitions also have a reference to their scope node. Using this, a definition may query its scope node with a path, which resolves to another scope node. Scope nodes know their parents and children, so traversing the tree is possible in both directions.

The purpose of the scope tree is to link sequences and variants with the correct reference, not getting the needed value by traversing it at each read. Once sequences and variants have a reference, they only need to get the current value prior to reading. Thus, tree traversing is only done once at trace opening time.

Example

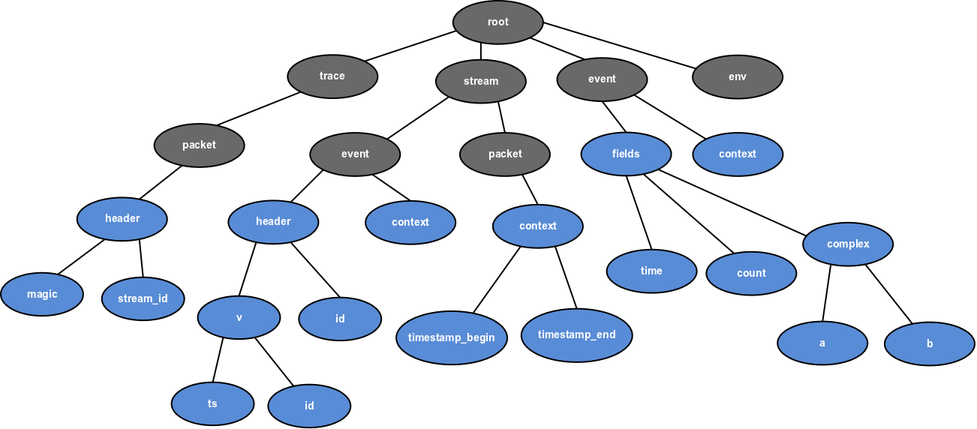

When opening the trace and building the events, the scope tree will usually also be built. For a specific event, the scope tree might look like this:

Blue nodes are the ones with a linked definition and grey ones have none (they only exist for organization and location).

The scope tree is built as the definitions are created. Paths are relative when they contain no dot and absolute when they have at least one. An absolute path starts with one of the second level labels: trace, stream, event or env. So, from the point of view of node b in the above diagram:

- relative path a is equivalent to absolute path event.fields.complex.a

- relative path count is equivalent to absolute path event.fields.count

- the current stream ID path is trace.packet.header.stream_id (cannot be reached with a relative path)

- the path of the starting time stamp of the current packet is stream.packet.context.timestamp_begin (cannot be reached with a relative path)

From the point of view of node v, relative path id is equivalent to absolute path stream.event.header.id.
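
The path rules can be sketched with a tiny tree. The class SketchScopeNode is hypothetical, and the lookup strategy for relative paths (searching the enclosing scopes from the innermost outward) is an assumption that happens to reproduce the equivalences listed above; the real resolution algorithm may differ.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical scope node: knows its parent and its named children. */
final class SketchScopeNode {
    private final String name;
    private final SketchScopeNode parent;
    private final Map<String, SketchScopeNode> children = new LinkedHashMap<>();

    SketchScopeNode(String name, SketchScopeNode parent) {
        this.name = name;
        this.parent = parent;
        if (parent != null) {
            parent.children.put(name, this);
        }
    }

    /** Creates (or reuses) every node along a dotted path below this node. */
    SketchScopeNode add(String dottedPath) {
        SketchScopeNode node = this;
        for (String part : dottedPath.split("\\.")) {
            SketchScopeNode child = node.children.get(part);
            node = (child != null) ? child : new SketchScopeNode(part, node);
        }
        return node;
    }

    /** Dotted path from the (unnamed) root down to this node. */
    String absolutePath() {
        if (parent == null) {
            return "";
        }
        String prefix = parent.absolutePath();
        return prefix.isEmpty() ? name : prefix + "." + name;
    }

    /** Resolves an absolute path (contains a dot) or a relative one (no dot). */
    SketchScopeNode resolve(String path) {
        if (path.contains(".")) {
            SketchScopeNode node = this;
            while (node.parent != null) {
                node = node.parent; // climb to the root
            }
            for (String part : path.split("\\.")) {
                node = node.children.get(part);
                if (node == null) {
                    return null;
                }
            }
            return node;
        }
        // Assumed rule: look in each enclosing scope, innermost first.
        for (SketchScopeNode scope = parent; scope != null; scope = scope.parent) {
            SketchScopeNode found = scope.children.get(path);
            if (found != null) {
                return found;
            }
        }
        return null;
    }
}
```

In line with the text, such a lookup would be done once when the trace is opened, after which sequences and variants keep the resolved reference.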

Packet information

A packet information is a header and an optional context. It does not contain an array of events; events are read one at a time.

The packet information class makes those two structures available through methods getHeader() and getContext(). Since the packet context is optional, getContext() returns null if there is no context.

It is also possible to get the CPU ID, the first and last time stamps and the stream ID from the packet. Those values are read from the packet context (if it exists) when calling updateCachedInfos(). If you are using any provided reader, you do not need to care about this last method; when you ask for a packet information, the cached information will always be synchronized with the inner context structure. Caching this information avoids some tree traversal each time one of these values is desired (which may happen a lot in typical use cases). If you are developing a new reader, you need to manually call updateCachedInfos() every time the packet information context structure is updated.

Make sure not to modify the header and context structures when getting references to them, since no copy is created and the structures are reused afterwards when using the framework facilities. Instead, if you really need to modify the original information or keep it for a long time, make a deep copy of the packet information using the copy constructor.

Event

An event is analogous to a CTF event: header, optional per-stream and per-event contexts, payload. It also contains:

- its name (from the metadata text)

- the stream ID which it belongs to

- its own ID within that stream

- its computed real time stamp (in cycles)

The event class makes all this available. Since both contexts are optional, getContext() and getStreamContext() return null if there is no context.

Make sure not to modify the inner structures when getting references to them, since no copy is created and the structures are reused afterwards when using the framework facilities. Instead, if you really need to modify the original information or keep it for a long time, make a deep copy of the event using the copy constructor.

Trace parameters

The trace parameters represent the universal token for data exchange between lots of classes, especially inputs, outputs, readers and writers.

Those parameters are the following and are common to all CTF traces:

- metadata plain text

- version major number

- version minor number

- trace UUID

- trace default byte order

- map of names (string) to clock descriptors

- one environment descriptor (which is a map of names (string) to string or integer values)

- map of stream IDs to stream descriptors

Because all parts of the library share the same trace parameters, less communication is needed between them and classes are more decoupled.

In normal use, the input is responsible for filling the trace parameters with what is found in the metadata text. Once a trace is opened, you can get the trace parameters using the getTraceParameters() method.

Utilities

As is the case with many projects, the CTF component of Linux Tools has its own set of utilities. One general-purpose class includes several static methods that factor out common code. Other utilities have their own classes.

General utilities

The general utility class has the following static methods, used here and there:

- unsigned comparison of two Java long integers

- byte array to object conversion

- object to byte array conversion

- creation of an unsigned integer with specific size, alignment, byte order and initial value

- creation of several specific integer types (e.g. 32-bit (8-bit aligned), 8-bit (8-bit aligned), etc.)

- array of integers (bytes) from a UTF-8 string
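
Two of these utilities are easy to approximate with the standard library. The sketch below is not the library's code: SketchUtils is a made-up class, Long.compareUnsigned() requires Java 8 or later, and the byte values are returned as plain int values rather than as CTF integer objects.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical equivalents of two general utilities, using only the JDK. */
final class SketchUtils {
    /**
     * Compares two long values as if they were unsigned 64-bit integers,
     * which matters for CTF integers that use the full 64 bits.
     */
    static int compareUnsigned(long a, long b) {
        return Long.compareUnsigned(a, b);
    }

    /** Returns the UTF-8 bytes of a string as unsigned values (0 to 255). */
    static int[] utf8ToUnsignedBytes(String text) {
        byte[] raw = text.getBytes(StandardCharsets.UTF_8);
        int[] unsigned = new int[raw.length];
        for (int i = 0; i < raw.length; i++) {
            unsigned[i] = raw[i] & 0xFF; // Java bytes are signed, so mask them
        }
        return unsigned;
    }
}
```

For instance, -1L is the largest possible value when seen as unsigned, so it compares greater than 1L here while Long.compare(-1L, 1L) reports the opposite.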

Trace parameters MTA visitor

The trace parameters MTA visitor class implements the MTA visitor interface and binds the metadata tree analyzer (covered later) to the trace parameters.

Time stamp accumulator

Computing the time stamp of a given event relies on a special algorithm that was developed for LTTng. For the moment, this is the de facto method of computing it since LTTng is the only real tracer natively producing CTF traces.

The steps are not detailed here because the time stamp accumulator class takes care of them. An instance of this class accepts an absolute time stamp. It also accepts a relative time stamp, after which the absolute time stamp is computed from the previous and new data. It can then return the current time stamp in cycles, in seconds or as a Java object.

The time stamp accumulator is used by the readers when setting an event's real time stamp. It's also used by the translator when translating a range.

Metadata

As a reminder, the metadata text is written in TSDL and fully describes the structures of a CTF trace. A specific text exists for each individual trace.

Since TSDL is a formal domain-specific language, it is parsable. In this project, ANTLR is used to generate a lexer and a parser in Java from descriptions of the tokens and the grammar of TSDL. The work done by the generated TSDL lexer and parser (both in package org.eclipse.linuxtools.ctf.parser) is called the parsing stage and produces a metadata abstract syntax tree (AST).

A metadata AST is a tree containing all the tokens found in the metadata text. The tree structure makes it (relatively) easy to traverse and to analyze the semantics of the metadata (the syntax is okay, but does it make any sense?).

AST analysis

The metadata tree analyzer (MTA) class implements the metadata analysis stage. The metadata AST is rigorously analyzed and higher-level data is extracted from it. This higher-level data is passed to a visitor. In fact, the visitor is not a real visitor (in the design pattern sense), but we couldn't find a better name. A dedicated interface declares what needs to be in any MTA visitor.

The MTA only has one public method, and that is enough: analyze(). When building an instance of it, you pass to the constructor the only two things this analyzer needs: the metadata AST (an ANTLR tree object) and the visitor. Then, when calling analyze(), the whole metadata AST is traversed and, as useful data is found, the appropriate visitor methods are called with it.

Here are a few methods of the MTA visitor interface, to give an idea:

    public void addClock(String name, UUID uuid, String description, Long freq, Long offset);
    public void addEnvProperty(String key, String value);
    public void addEnvProperty(String key, Long value);
    public void setTrace(Integer major, Integer minor, UUID uuid, ByteOrder bo, StructDeclaration packetHeaderDecl);
    public void addStream(Integer id, StructDeclaration eventHeaderDecl, StructDeclaration eventContextDecl, StructDeclaration packetContextDecl);
    public void addEvent(Integer id, Integer streamID, String name, StructDeclaration context, StructDeclaration fields, Integer logLevel);

This interface also features lots of info*() methods which are called with the current analyzed node for progression information. No useful data is to be read within these methods.

The trace parameters MTA visitor utility class implements this interface and acts as a bridge between the metadata tree analyzer and the trace parameters. As it is called by the MTA, it fills a TraceParameters object with the information.

The MTA also checks the semantics as it traverses and analyzes the metadata AST. For example, it will throw an exception with the line number if it finds two trace blocks, two structure fields with the same key name, an attribute that's unknown for a certain block type, etc. However, it won't throw exceptions in the following situations:

- multiple clocks with the same name

- multiple environment variables with the same name

- multiple streams with the same ID

- multiple events with the same ID

This must be checked or ignored by the visitor.
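As an illustration of a visitor that chooses to check rather than ignore, here is a minimal sketch. The class DuplicateCheckingVisitor and its reduced addClock/addStream signatures are hypothetical (the real visitor methods take more parameters, as listed above), and IllegalStateException is only a stand-in for whatever exception type the library would use.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical, reduced visitor: only two callbacks are modelled,
// each with a simplified signature.
class DuplicateCheckingVisitor {
    private final Set<String> clockNames = new HashSet<>();
    private final Set<Integer> streamIds = new HashSet<>();

    // Called once per clock block found by the analyzer.
    void addClock(String name) {
        // Set.add() returns false when the element is already present.
        if (!clockNames.add(name)) {
            throw new IllegalStateException("duplicate clock name: " + name);
        }
    }

    // Called once per stream block found by the analyzer.
    void addStream(Integer id) {
        if (!streamIds.add(id)) {
            throw new IllegalStateException("duplicate stream ID: " + id);
        }
    }

    int getNbStreams() {
        return streamIds.size();
    }
}
```

A visitor that prefers to ignore duplicates could simply keep the first or the last value instead of throwing.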

Strings

Anywhere in the library where a string token that is part of TSDL is needed, taking it from the dedicated abstract class of TSDL string constants is preferred. For field names that are common to all metadata texts, a second constants class can be used (e.g. timestamp_begin for packet contexts).

Reading

Readers are the objects that parse some CTF subformat and fill Event and PacketInfo structures. Readers are not, however, used directly by a user wanting to read a trace. They could be, but the outer procedures (mainly during the opening stage) needed prior to using any reader reside in trace inputs. Trace inputs are the interfaces (software interfaces, not Java interfaces) between a user and a reader. They are discussed in section Trace input and output.

During the design phase of this generic CTF Java library, two master use cases were identified regarding reading: streaming and random access. The following two sections explain the difference between both and in what situations a user needs one or the other.

Both types of readers have their own interface which inherits from IReader. The shared methods are worth mentioning here:

    public void openTrace() throws ReaderException;
    public void closeTrace() throws ReaderException;
    public String getMetadataText() throws ReaderException;
    public void openStreams(TraceParameters params) throws ReaderException;
    public void closeStreams() throws ReaderException;
    public void openStruct(StructDefinition def, String name) throws ReaderException;
    public void closeStruct(StructDefinition def, String name) throws ReaderException;
    public void openVariant(VariantDefinition def, String name) throws ReaderException;
    public void closeVariant(VariantDefinition def, String name) throws ReaderException;
    public void openArray(ArrayDefinition def, String name) throws ReaderException;
    public void closeArray(ArrayDefinition def, String name) throws ReaderException;
    public void openSequence(SequenceDefinition def, String name) throws ReaderException;
    public void closeSequence(SequenceDefinition def, String name) throws ReaderException;
    public void readInteger(IntegerDefinition def, String name) throws ReaderException;
    public void readFloat(FloatDefinition def, String name) throws ReaderException;
    public void readEnum(EnumDefinition def, String name) throws ReaderException;
    public void readString(StringDefinition def, String name) throws ReaderException;

What you see first is that all readers, be they streamed or random access, must be able to open the trace and close it (i.e. acquire and release resources). They must also know how to get the complete metadata text. For binary CTF, this means concatenating all text packets of the metadata file as seen previously. For JSON CTF, this means returning the whole content of the external metadata file pointed to by the metadata node, or the whole node text if the metadata text is embedded.

Once the reader owner (usually a trace input) has the metadata text, it can parse it and use a metadata tree analyzer to fill trace parameters. This is why those trace parameters only come to the reader afterwards, when opening all its streams. The information about streams to open is located in the map of stream descriptions inside TraceParameters.

Now all readers must be able to read all CTF types. As you may notice in the previous declarations, compound types have open* and close* methods while simple types only have read* methods. This is because the real data is never a compound type. A structure may have 3 embedded arrays of structures containing integers, but all this organization is to be able to find said integers, because they have the values we need.

A compound CTF type has the following reading algorithm:

- call the appropriate reader's open* method

- for each contained definition, in order, call its read(String, IReader)

- call the appropriate reader's close* method

A simple CTF type has the following reading algorithm:

- call the appropriate reader's read* method
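The two algorithms above can be sketched as a small double dispatch between definitions and a reader. All names below (SketchReader, SketchDefinition, SketchInteger, SketchStruct, LoggingReader) are hypothetical stand-ins, reduced to one compound type and one simple type; they are not the library's classes.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Reduced stand-in for the reader interface.
interface SketchReader {
    void openStruct(SketchStruct def, String name);
    void closeStruct(SketchStruct def, String name);
    void readInteger(SketchInteger def, String name);
}

// Common base of all definitions in this sketch.
abstract class SketchDefinition {
    abstract void read(String name, SketchReader reader);
}

// Simple type: a single read* call on the reader.
class SketchInteger extends SketchDefinition {
    @Override
    void read(String name, SketchReader reader) {
        reader.readInteger(this, name);
    }
}

// Compound type: open*, then each child in declaration order, then close*.
class SketchStruct extends SketchDefinition {
    final Map<String, SketchDefinition> fields = new LinkedHashMap<>();

    @Override
    void read(String name, SketchReader reader) {
        reader.openStruct(this, name);
        for (Map.Entry<String, SketchDefinition> f : fields.entrySet()) {
            f.getValue().read(f.getKey(), reader);
        }
        reader.closeStruct(this, name);
    }
}

// A reader that only records the order in which it is called back.
class LoggingReader implements SketchReader {
    final List<String> calls = new ArrayList<>();

    public void openStruct(SketchStruct def, String name) { calls.add("open " + name); }
    public void closeStruct(SketchStruct def, String name) { calls.add("close " + name); }
    public void readInteger(SketchInteger def, String name) { calls.add("int " + name); }
}
```

Because the traversal lives in the compound definition, a nested structure needs no extra code in the reader: its open/close callbacks are simply invoked one level deeper.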

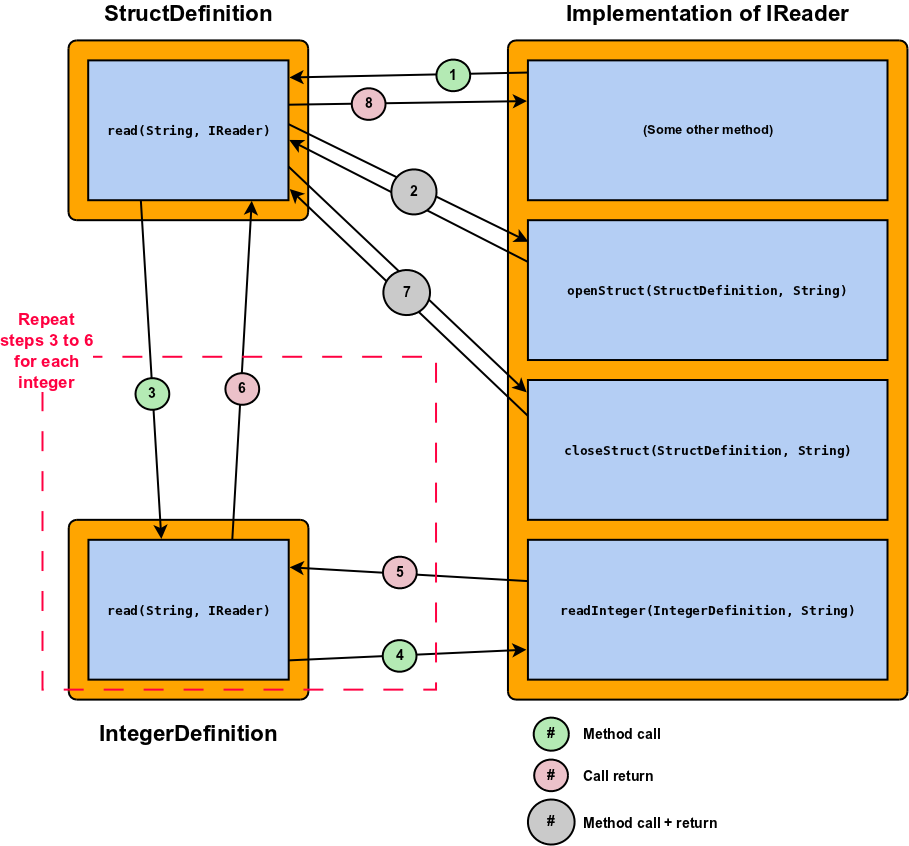

Here is a sequence diagram showing a structure of integers being read:

The initial call to StructureDefinition.read(String, IReader) is from another method in the reader. This might be, for example, from a method reading the context of a packet. In this case, the reader has a reference to the packet context's The Scout documentation has been moved to https://eclipsescout.github.io/. and will call:

packetContextDefinition.read(null, this);

When this call returns, all the sequence shown in the above diagram will have been executed, with underlying method calls to the same reader.

The String parameters everywhere are for exchanging the field names. Only StructDefinition objects should have their read(String, IReader) method called directly by a reader. The reading methods of other types will be called because they are eventual children of a structure. This is why the initial direct call passes null as the field name: a packet context or an event header, for example, does not have any name. In other words, they are not part of a parent structure. They could always be called context and header, but this is useless because those names are absolutely known and part of the TSDL semantics anyway.

Now, on with the above sequence diagram. Suppose we are a reader and need to read a structure definition myStructure containing the following ordered integers: a, b and c.

Step 1 is the following method call:

myStructure.read(null, this);

Step 2: the structure definition calls our openStruct(StructDefinition, String) method. Received parameters are the reference to this structure (equivalent to myStructure here) and null. Here, we do whatever we need to open the structure. For example:

- binary CTF: align our current bit buffer to the structure alignment value

- JSON CTF: the JSON object node corresponding to this structure is pushed as the current JSON context node

Step 3: the integer definition corresponding to name a has its read(String, IReader) called (first parameter is a, second is the same reference to the reader that we are).

Step 4: this integer definition calls our readInteger(IntegerDefinition, String), passing this and a. Now we have to read an integer value and set the definition's current value to it. Examples:

- binary CTF: use the current bit buffer to read the integer value

- JSON CTF: search the a node (within the current context node) and read its value (convert text number to integer value)

Steps 5 and 6 are just call returns. Steps 3 to 6 are repeated for definitions b and c.

Step 7: the structure definition calls our closeStruct(StructDefinition, String) method. Received parameters are the reference to this structure (equivalent to myStructure here) and null. Here, we do whatever we need to close the structure. For example:

- binary CTF: do absolutely nothing

- JSON CTF: pop the current context node

Step 8: back to where we were at step 1.
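The eight steps can be replayed with a toy reader working on a map, which plays the role of the JSON context node described above. This is only a sketch under assumptions: IntDef, StructDef and MapReader are hypothetical names, the structure only holds integers, and values are stored as text to mimic the "convert text number to integer value" step.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical integer definition holding the last value read.
class IntDef {
    long value;

    void read(String name, MapReader reader) {
        reader.readInteger(this, name);              // step 4
    }
}

// Hypothetical structure definition with ordered integer fields.
class StructDef {
    final Map<String, IntDef> fields = new LinkedHashMap<>();

    void read(String name, MapReader reader) {
        reader.openStruct(this, name);               // step 2
        for (Map.Entry<String, IntDef> f : fields.entrySet()) {
            f.getValue().read(f.getKey(), reader);   // steps 3 to 6
        }
        reader.closeStruct(this, name);              // step 7
    }
}

// Reader over a map standing in for a parsed JSON object node.
class MapReader {
    private final Deque<Map<String, Object>> context = new ArrayDeque<>();
    private final Map<String, Object> root;

    MapReader(Map<String, Object> root) {
        this.root = root;
    }

    // Only top-level structures (null name) exist in this sketch:
    // push the root node as the current context node.
    void openStruct(StructDef def, String name) {
        context.push(root);
    }

    // Pop the current context node.
    void closeStruct(StructDef def, String name) {
        context.pop();
    }

    // Look the field up by name in the current context node and
    // convert its textual value to an integer.
    void readInteger(IntDef def, String name) {
        def.value = Long.parseLong(String.valueOf(context.peek().get(name)));
    }

    int depth() {
        return context.size();
    }
}
```

Note that the order of keys in the source map does not matter here: fields are found by name, in the order dictated by the structure definition.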

This whole mechanism might seem bloated, but a choice had to be made: do we browse the items of compound types in the readers or in the compound type definitions? If we browse them in the readers, the types do not need read/write methods, but the browsing has to be repeated in every reader. The chosen technique seems like the most generic one. Of course this last example is very simple; the approach is much more appreciated when we have to deal with arrays of structures containing 2-3 levels of other compound types. Implementing a new reader becomes easier because you don't have to think about recursion: it is managed by the architecture.

Streamed reading

Streamed reading means reading the resources forward and never having to go back, whatever those resources are. For binary CTF, they are stream files. For JSON CTF, the single JSON file. We could think about a network reader which receives CTF packets and, once the application is done with their content, dumps them. In this case, no backward seeking is needed.

The streamed reader interface only adds four methods to IReader:

    public PacketInfo getCurrentPacketInfo() throws ReaderException;
    public void nextPacket() throws ReaderException, NoMorePacketsException;
    public Event getCurrentPacketEvent() throws ReaderException;
    public void nextPacketEvent() throws ReaderException, NoMoreEventsException;

In a streamed reader, you don't read all events of a trace: you read all packets of a trace and, for each packet, all contained events. This means events do not necessarily come in order of time stamp across the whole trace, although packets must.

Method getCurrentPacketInfo() must return null if there are no more packets. If the initial call to getCurrentPacketInfo() returns null, this means the trace is empty (does not have a single packet). If getCurrentPacketInfo() returns a PacketInfo instance, this packet is the current one (hence "current packet info"). The values in this object are valid until the next call to nextPacket(), which seeks to the next packet. Do not call nextPacket() if getCurrentPacketInfo() returns null; you will get a NoMorePacketsException. If, as a reader user, you want to keep a PacketInfo object for a long time (being able to call nextPacket() again without modifying it), you need to get a deep copy of it using its copy constructor:

PacketInfo myCopy = new PacketInfo(referenceToThePacketInfoComingFromTheReader);

Method getCurrentPacketEvent() returns the current event of the current packet. The current packet is the one represented by the PacketInfo returned by getCurrentPacketInfo(). This means that as soon as a reader opens its streams, getCurrentPacketEvent() should return the first event of the first packet. If, at any moment, getCurrentPacketEvent() returns null, it means the current packet has no more events. If this happens right after a call to nextPacket(), it means the current packet has no events at all. But it could also mean that there's no such current packet (end of trace reached). Use getCurrentPacketInfo() first to be sure.

This way of reading packets and events at two separate levels enables easy translation between formats. Since a packet is the biggest unit of data in CTF, there must exist a way to read one exactly as it is in the trace. This reader behavior is not suitable for all situations. If you want the reader to read events in order of time stamp and do not care about packets, use a random access reader.
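The two-level reading described in this section boils down to two nested loops. The sketch below uses a hypothetical in-memory reader (InMemoryStreamedReader) in which a packet is just a list of event time stamps; only the four method names mirror the streamed reader interface, and IllegalStateException stands in for the library's NoMorePacketsException and NoMoreEventsException.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory streamed reader: a packet is a list of time stamps.
class InMemoryStreamedReader {
    private final List<List<Long>> packets;
    private int packetIdx = 0;
    private int eventIdx = 0;

    InMemoryStreamedReader(List<List<Long>> packets) {
        this.packets = packets;
    }

    // Returns null when there are no more packets.
    List<Long> getCurrentPacketInfo() {
        return packetIdx < packets.size() ? packets.get(packetIdx) : null;
    }

    void nextPacket() {
        if (getCurrentPacketInfo() == null) {
            throw new IllegalStateException("no more packets");
        }
        packetIdx++;
        eventIdx = 0;
    }

    // Returns null when the current packet has no more events,
    // or when there is no current packet at all.
    Long getCurrentPacketEvent() {
        List<Long> p = getCurrentPacketInfo();
        return (p != null && eventIdx < p.size()) ? p.get(eventIdx) : null;
    }

    void nextPacketEvent() {
        if (getCurrentPacketEvent() == null) {
            throw new IllegalStateException("no more events");
        }
        eventIdx++;
    }
}

class StreamedLoopDemo {
    // Visits every packet and, for each packet, every contained event.
    static List<Long> readAll(InMemoryStreamedReader reader) {
        List<Long> seen = new ArrayList<>();
        while (reader.getCurrentPacketInfo() != null) {
            while (reader.getCurrentPacketEvent() != null) {
                seen.add(reader.getCurrentPacketEvent());
                reader.nextPacketEvent();
            }
            reader.nextPacket();
        }
        return seen;
    }
}
```

Checking getCurrentPacketInfo() in the outer loop is what disambiguates "empty packet" from "end of trace" when getCurrentPacketEvent() returns null.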

The JSON CTF streamed reader uses Jackson, a high-performance JSON processor, to parse the JSON CTF file. Jackson has the advantage of providing two combinable ways of reading JSON: streaming (getting one token at a time) and a tree model. Since JSON CTF files can rapidly grow big, the streaming method is used as much as possible. Only small nodes (e.g. event nodes) are converted to a tree model to make traversal easier. Using a tree model, members of CTF structures do not have to be in any particular order, as long as they keep the same names (keys). This is because, contrary to streamed parsing, random access to any node is possible using a tree model. This feature makes human editing of JSON CTF files easier.

Random access reading

Random access readers are used whenever a user wants to read events by order of time stamp (as they were recorded) or seek at a specific time location in the trace. Reading events in order of time stamp means the current event may be in packet A and the next one will be in packet B. This is because tracers may record with multiple CPUs at the same time and produce packets with interlaced events.
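The source does not detail how the interlaced events are put back in order. One common way to do it, shown here purely as an illustrative sketch and not as the library's algorithm, is a k-way merge driven by a priority queue. The class TimestampMerge is hypothetical, and each stream is assumed to be already sorted by time stamp.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

class TimestampMerge {
    // Merges per-stream time stamps (each list already sorted) into one
    // globally sorted list.
    static List<Long> merge(List<List<Long>> streams) {
        // Each queue entry is {time stamp, stream index, position in stream};
        // the queue is ordered by time stamp.
        PriorityQueue<long[]> heads =
                new PriorityQueue<>((x, y) -> Long.compare(x[0], y[0]));
        for (int s = 0; s < streams.size(); s++) {
            if (!streams.get(s).isEmpty()) {
                heads.add(new long[] {streams.get(s).get(0), s, 0});
            }
        }
        List<Long> ordered = new ArrayList<>();
        while (!heads.isEmpty()) {
            // The smallest pending time stamp is the next event of the trace.
            long[] head = heads.poll();
            ordered.add(head[0]);
            // Replace it with the following event of the same stream, if any.
            List<Long> stream = streams.get((int) head[1]);
            int next = (int) head[2] + 1;
            if (next < stream.size()) {
                heads.add(new long[] {stream.get(next), head[1], next});
            }
        }
        return ordered;
    }
}
```

With this approach the current event may come from one stream and the next one from another, which matches the packet A / packet B situation described above.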

The random access reader interface adds the following methods to IReader:

    public Event getCurrentEvent() throws ReaderException;
    public void advance() throws ReaderException;
    public void seek(long ts) throws ReaderException;

Whereas you must have two loops (read all packets, and for each packet, read all events) with a streamed reader, you only need one with a random access reader:

while (myRandomAccessReader.getCurrentEvent() != null) { Event ev = myRandomAccessReader.getCurrentEvent(); // Do whatever you want with the data of ev myRandomAccessReader.advance(); }

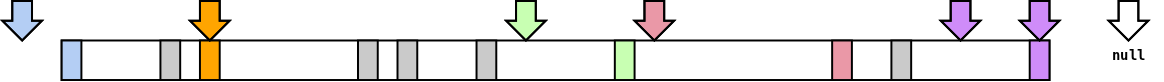

The seek(long) method seeks at a specific time stamp. Here, just like in all places of this library where a long time stamp is required, the unit is CTF clock cycles. The event selection mechanism when seeking at a time stamp is shown by the following diagram:

On the above diagram, the large rectangle represents the whole trace and small blocks are events. Arrows are actions of seeking. An event selected by a seeking operation has the same color. The rule is:

- seek before the first event time stamp: current event is the first event of the trace

- seek at any time stamp between the first and last events of the trace (inclusive): current event is the next existing one with time stamp greater or equal to query

- seek after the last event of the trace: current event is null (end of trace reached)
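The three seeking rules amount to finding the first event whose time stamp is greater than or equal to the query. A minimal sketch over a sorted array of time stamps follows; the class SeekSketch is hypothetical, and a real reader would work on packets and streams rather than on a flat array. A return value of -1 stands for the null current event.

```java
class SeekSketch {
    // Returns the index of the first event whose time stamp is >= ts,
    // or -1 when ts is after the last event (end of trace reached).
    static int seek(long[] sortedTimestamps, long ts) {
        int lo = 0;
        int hi = sortedTimestamps.length;
        // Binary search for the lower bound of ts.
        while (lo < hi) {
            int mid = (lo + hi) >>> 1;
            if (sortedTimestamps[mid] < ts) {
                lo = mid + 1;
            } else {
                hi = mid;
            }
        }
        return lo < sortedTimestamps.length ? lo : -1;
    }
}
```

Seeking before the first event naturally yields index 0, so the first rule needs no special case.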

You'll notice you cannot get any information about the current packet with this interface. In fact, packets are useless when your only use case is to get events in recorded order and seek at specific time locations. Packets are used when translating from one format to another, to get the same exact content structure. For analysis and monitoring purposes, a random access reader is much more useful.

Since random access reading is the main use case of TMF regarding the CTF component, a binary CTF random access reader is implemented as BinaryCTFRandomAccessReader. Because many algorithms are shared between the binary CTF streamed reader and the binary CTF random access reader, they both extend a common abstract class.

There is no JSON CTF random access reader. The only purposes of JSON CTF are universal data exchange for small traces, easy human editing and the possibility to track the content changes of sample traces in revision control systems. The workflow is to translate a small range of a native binary CTF trace to JSON CTF, modify it to exercise some parts of TMF, store it and convert it back to binary CTF when time comes to test the framework. The only JSON CTF reader needed by all those operations is a streamed one.

Writing

With Linux Tools' CTF component, it is also possible to write CTF traces. This is needed in order to translate from one CTF subformat to another (mostly JSON CTF to binary CTF and vice versa).

Writing is not as complex as reading because it only presents a single interface. If you're going to write something from scratch, there's no such thing as "random access writing", and we don't have appending/prepending use cases here. Writers only need to implement IWriter:

    public interface IWriter {
        public void openTrace(TraceParameters params) throws WriterException;
        public void closeTrace() throws WriterException;
        public void openStream(int id) throws WriterException;
        public void closeStream(int id) throws WriterException;
        public void openPacket(PacketInfo packet) throws WriterException;
        public void closePacket(PacketInfo packet) throws WriterException;
        public void writeEvent(Event ev) throws WriterException;
        // Methods for CTF types not shown
    }

The openTrace() method will usually do a few validations, like checking if the output directory/file exists and so on. In its simplest form, openTrace() and closeTrace() do absolutely nothing. The writer owner also passes valid trace parameters to openTrace() so that the writer may use them as soon as possible. Since trace parameters contain the whole metadata text, the metadata may already be outputted at this stage.

After the trace is opened, openStream(int) is called for each stream found in the metadata. The writer allocates all needed resources at this stage.

The actual writing of data comes in the following order:

- open a packet (openPacket(PacketInfo)): the packet header and context (if it exists) will probably be written here (the stream ID can be retrieved from the packet info if needed for output purposes)

- write events (writeEvent(Event)): this is called multiple times between packet opening and closing; all calls to writeEvent(Event) mean the events belong to the last opened packet

- close the packet (closePacket(PacketInfo)): not needed by all formats
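The call order above can be illustrated with a toy text writer. TextDumpWriter is a hypothetical class with simplified signatures (a stream ID instead of a PacketInfo, a cycle count instead of an Event); it is not one of the shipped writers.

```java
// Hypothetical writer producing a plain-text dump of packets and events.
class TextDumpWriter {
    private final StringBuilder out = new StringBuilder();
    private boolean packetOpen = false;

    // Packet-level information is written when the packet is opened.
    void openPacket(int streamId) {
        out.append("packet (stream ").append(streamId).append(") {\n");
        packetOpen = true;
    }

    // Every event written belongs to the last opened packet.
    void writeEvent(long cycles) {
        if (!packetOpen) {
            throw new IllegalStateException("no open packet");
        }
        out.append("  ").append(cycles).append('\n');
    }

    // This text format needs a closing brace; other formats may need nothing.
    void closePacket() {
        out.append("}\n");
        packetOpen = false;
    }

    String getOutput() {
        return out.toString();
    }
}
```

In the library, the check on the open packet would rather belong to the trace output owning the writer, so that it is not repeated in every writer.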

Just like class Event has no reading method, it doesn't have any writing method: what to write of an event is chosen by the writer. If a complete representation of a CTF event is wanted, the header, optional contexts and payload must be written. But a writer could also only dump the headers, in which case it will ignore the other structures.

Writing CTF types is fully analogous to reading them (see Reading): the writer calls the write(String, IWriter) of a structure to be written, passing null as the name and this as the writer.

Provided writers

A few writers are already implemented and shipped with the library. The following list describes them.

- The binary CTF writer is able to write a valid binary CTF trace, that is, a directory containing a packetized metadata file and some stream files.

- The JSON CTF writer produces native JSON and, unlike the JSON CTF reader, doesn't have any external dependency.

- The HTML writer was written as a proof of concept that coding a writer is easy, but could still be useful. It outputs one HTML page per packet, each one containing divisions for all events with all their structures.

- The cycles writer is a very simple writer that only textually outputs the cycle numbers of all received events. It was created mainly as a testing aid but is still shipped for debug and learning purposes.

Trace input and output

Since a user may first close a trace, then open it, then close a packet and read an integer using a reader, some sort of state machine is needed to know what is allowed and what's not. As we don't want to repeat this in every reader, users about to read/write a trace must use a trace input/output. Those classes, the trace input and the trace output, are the owners of readers and writers. They comprise a rudimentary state machine that checks if a trace is opened before getting an event, opened before getting closed, etc. When creating one of those, you always register an existing reader or writer.
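A rudimentary state machine of this kind could look like the following sketch. GuardedInputSketch and WrongStateSketchException are hypothetical names; the exception is unchecked here only to keep the example short, whereas the library's methods declare a checked WrongStateException.

```java
// Hypothetical stand-in for the library's wrong state exception.
class WrongStateSketchException extends RuntimeException {
    WrongStateSketchException(String message) {
        super(message);
    }
}

// Hypothetical trace input reduced to its state checks.
class GuardedInputSketch {
    private enum State { CLOSED, OPEN }

    private State state = State.CLOSED;

    void open() {
        require(State.CLOSED, "trace is already open");
        // A real trace input would call the registered reader here.
        state = State.OPEN;
    }

    void close() {
        require(State.OPEN, "trace is not open");
        state = State.CLOSED;
    }

    int getNbStreams() {
        require(State.OPEN, "trace is not open");
        return 0; // placeholder: no real trace is read in this sketch
    }

    // Central check shared by all public methods.
    private void require(State expected, String message) {
        if (state != expected) {
            throw new WrongStateSketchException(message);
        }
    }
}
```

Keeping the checks in the owner means a reader or writer can assume it is always called in a valid order.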

Input

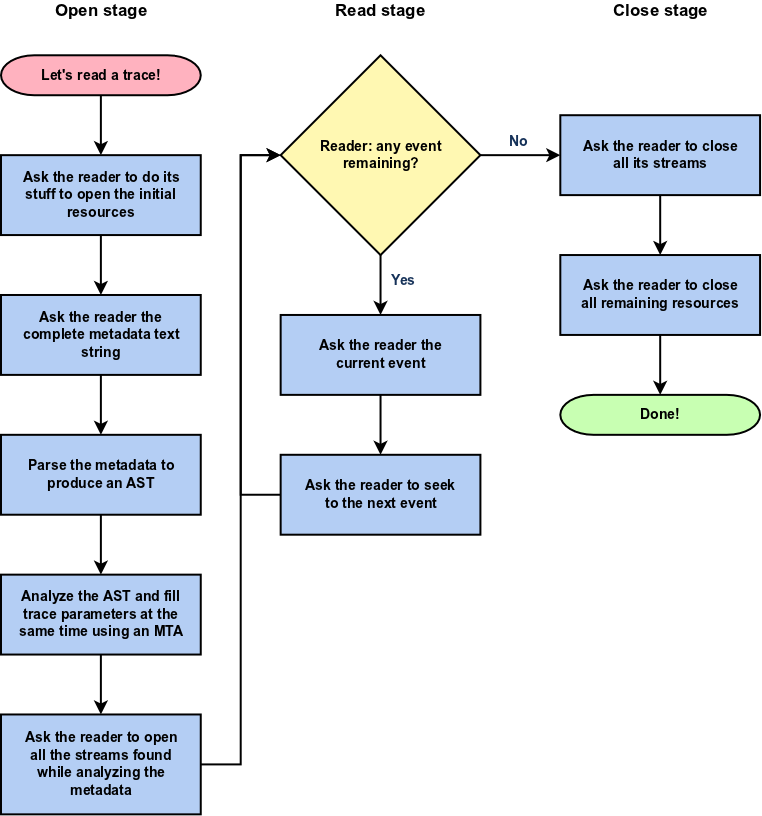

Here is the ultimate trace input flowchart:

Its job is to hide to the user the repeating process of opening a trace, getting its metadata text, parsing it, analyzing its metadata AST, filling trace parameters and opening streams shared by all readers.

The trace input is in fact an abstract class. Since we have two reader interfaces, we need two concrete trace inputs: the streamed trace input uses a streamed reader while RandomAccessTraceInput uses a random access one. Afterwards, using one of those trace inputs is very easy.

Methods shared by both versions are pretty straightforward:

    public void open() throws WrongStateException, TraceInputException;
    public void close() throws WrongStateException, TraceInputException;
    public int getNbStreams() throws WrongStateException;
    public ArrayList<Integer> getStreamsIDs() throws WrongStateException;
    public TraceParameters getTraceParameters() throws WrongStateException;

A reference to a streamed/random access reader is given at construction time to a streamed/random access trace input. Before doing anything useful, you must open a trace. This will issue a background call to the registered reader's openTrace() method. Then, it is possible to get the number of streams, get all the stream IDs or get the correct trace parameters. As usual, do not modify the trace parameters reference returned by getTraceParameters(): no copy is performed and this shared object is needed by lots of underlying blocks.

Streamed

The streamed trace input adds the following public methods to the base trace input:

    public PacketInfo getCurrentPacketInfo() throws WrongStateException, TraceInputException;
    public void nextPacket() throws WrongStateException, TraceInputException;
    public Event getCurrentPacketEvent() throws WrongStateException, TraceInputException;
    public void nextPacketEvent() throws WrongStateException, TraceInputException;

Those are analogous to the streamed reader ones, except you cannot call them if the trace is not opened first. No copy of events and packets info will be performed by the trace input, so refer to section Reading to understand how long the reference data remains valid.

Random access

The random access input adds the following public methods to the base trace input:

    public Event getCurrentEvent() throws WrongStateException, TraceInputException;
    public void advance() throws WrongStateException, TraceInputException;
    public void seek(long ts) throws WrongStateException, TraceInputException;

Again, see Random access reading to understand these.

Output

It turns out that the trace output is also an abstract class. Extending classes are the streamed trace output and the buffered trace output. Both can use any implementation of IWriter. The only difference is that the buffered one is more intelligent. By copying all received events into a temporary buffer, it is able to modify the packet context prior to dumping all this to the owned writer. This is used to shrink a packet size when the initial size is too large, or conversely to expand it if too many events were written to the current packet. This makes it possible to not care about the packet and content sizes if events are removed from or added to a packet.

This feature is useful when manually modifying a JSON CTF packet (adding/removing events) and going back to binary CTF. If using a buffered trace output, you don't have to also manually edit the packet sizes in the JSON CTF trace. The streamed version immediately calls the underlying writer, so such inconsistencies are written as is, which makes it possible to introduce serious errors.
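The idea of buffering events so that sizes can be fixed before anything reaches the writer can be sketched as follows. Everything here is an assumption made for illustration: the class BufferedPacketSketch is hypothetical, events are modelled as already serialized byte arrays, and padding the packet to a multiple of a fixed number of bytes is just one possible policy. Sizes are expressed in bits, as CTF packet contexts do for content_size and packet_size.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical buffer for the events of one packet.
class BufferedPacketSketch {
    private final int headerBytes;
    private final List<byte[]> events = new ArrayList<>();

    // headerBytes: size of the packet header and context, in bytes.
    BufferedPacketSketch(int headerBytes) {
        this.headerBytes = headerBytes;
    }

    // Events are only stored; nothing is sent to a writer yet.
    void writeEvent(byte[] serializedEvent) {
        events.add(serializedEvent);
    }

    // Content size in bits: header/context plus all buffered events.
    long contentSizeBits() {
        long bytes = headerBytes;
        for (byte[] e : events) {
            bytes += e.length;
        }
        return bytes * 8L;
    }

    // Packet size in bits: content size rounded up to a multiple of
    // alignBytes (assumed padding policy).
    long packetSizeBits(int alignBytes) {
        long bytes = contentSizeBits() / 8L;
        long padded = ((bytes + alignBytes - 1) / alignBytes) * alignBytes;
        return padded * 8L;
    }
}
```

Once the packet is closed, the recomputed sizes would be stored in the packet context before the header, context and events are handed to the owned writer.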

Translation

The generic software architecture described so far enables a very interesting application: translating. Translating means going from one CTF format to another automatically. To do this, a translator class is shipped.

The job of the translator is very easy: it takes an opened streamed trace input and an opened buffered trace output, then reads packets and events from the input side and gives them to the output side. From a user's point of view, you only need to create the input/output and call translate() on the translator. To follow the progress, you may implement ITranslatorObserver and set it as an observer of the translator. The translator observer interface looks like this:

    public interface ITranslatorObserver {
        public void notifyStart();
        public void notifyNewPacket(PacketInfo packetInfo);
        public void notifyNewEvent(Event ev);
        public void notifyStop();
    }

A basic translator observer, which prints to standard output, is shipped as a utility.

If you call translate() without any parameter, the whole input trace will be translated. Should you need to translate only a range, you may call translate(long, long). This version will translate all the events between two time stamps and discard any event or packet outside that range. Packet sizes and event time stamps are automatically fixed when translating a range.
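Range translation can be pictured as a filter applied between the input and the output, with the observer notified along the way. The sketch below is hypothetical on every point: RangeTranslateSketch and SketchObserver are invented names, packets are lists of time stamps, the range bounds are assumed inclusive (the text above does not say), and no size or time stamp fixing is modelled.

```java
import java.util.ArrayList;
import java.util.List;

// Reduced, hypothetical observer: only packet and event notifications.
interface SketchObserver {
    void notifyNewPacket(int packetIndex);
    void notifyNewEvent(long timestamp);
}

class RangeTranslateSketch {
    // Keeps the events whose time stamp lies in [begin, end] and drops
    // packets that end up with no event at all.
    static List<List<Long>> translate(List<List<Long>> packets,
            long begin, long end, SketchObserver observer) {
        List<List<Long>> output = new ArrayList<>();
        for (int i = 0; i < packets.size(); i++) {
            List<Long> kept = new ArrayList<>();
            for (long ts : packets.get(i)) {
                if (ts >= begin && ts <= end) {
                    kept.add(ts);
                }
            }
            if (kept.isEmpty()) {
                continue; // whole packet outside the range: discard it
            }
            observer.notifyNewPacket(i);
            for (long ts : kept) {
                observer.notifyNewEvent(ts);
            }
            output.add(kept);
        }
        return output;
    }
}
```

An observer counting notifications is enough to drive a progress bar in a user interface.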

Examples

For some people, including the original author of this article, there's nothing better than a few examples to learn a new framework/library/architecture. This section lists a few simple examples (Java snippets) that might be just what you need to get started with Linux Tools' CTF component.

Reading a binary CTF trace (order of events)

This example reads a complete binary CTF trace in order of events (time stamps). For each one, its content is printed using the default toString() of the data types.

    package examples;

    import org.eclipse.linuxtools.ctf.core.trace.data.Event;
    import org.eclipse.linuxtools.ctf.core.trace.input.RandomAccessTraceInput;
    import org.eclipse.linuxtools.ctf.core.trace.input.ex.TraceInputException;
    import org.eclipse.linuxtools.ctf.core.trace.reader.BinaryCTFRandomAccessReader;

    public class Example {
        public static void main(String[] args) throws Exception {
            // Create the random access reader
            BinaryCTFRandomAccessReader reader = new BinaryCTFRandomAccessReader("/path/to/trace/directory");

            // Use this reader with a random access trace input
            RandomAccessTraceInput input = new RandomAccessTraceInput(reader);

            try {
                // Open the input
                input.open();

                // Main loop: a null current event means the end of the trace
                // (uses the random access input methods listed in section Random access)
                while (input.getCurrentEvent() != null) {
                    // Get current event
                    Event e = input.getCurrentEvent();

                    // Print it (default debug format; similar to JSON)
                    System.out.println(e);

                    // Move to the next event in order of time stamp
                    input.advance();
                }

                // Close the input
                input.close();
            } catch (TraceInputException e) {
                // Something bad happened...
                e.printStackTrace();
            }
        }
    }