
Linux Tools Project/TMF/CTF guide


Revision as of 23:37, 21 August 2012

This article is not finished. Please do not modify it until this label is removed by its original author. Thank you.


This article is a guide about using the CTF component of Linux Tools (org.eclipse.linuxtools.ctf.core). It targets both users (of the existing code) and developers (intending to extend the existing code).


CTF general information

This section discusses the CTF format.

What is CTF?

CTF (Common Trace Format) is a generic binary trace format defined and standardized by EfficiOS. Although EfficiOS is the company maintaining LTTng (which uses CTF as its sole output format), CTF was designed as a general purpose format able to accommodate virtually any tracer (software or hardware, embedded or server, etc.).

CTF was designed to be very efficient to produce, albeit rather difficult to decode, mostly due to the metadata parsing stage and dynamic scoping support.

We distinguish two flavours of CTF in the next sections: binary CTF refers to the official binary representation of a CTF trace and JSON CTF is a plain text equivalent of the same data.

Binary CTF anatomy

This article does not cover the full specification of binary CTF; the official specification already does this.

Basically, the purpose of a trace is to record events. A binary CTF trace, like any other trace, is thus a collection of event data.

Here is a binary CTF trace:

Stream files

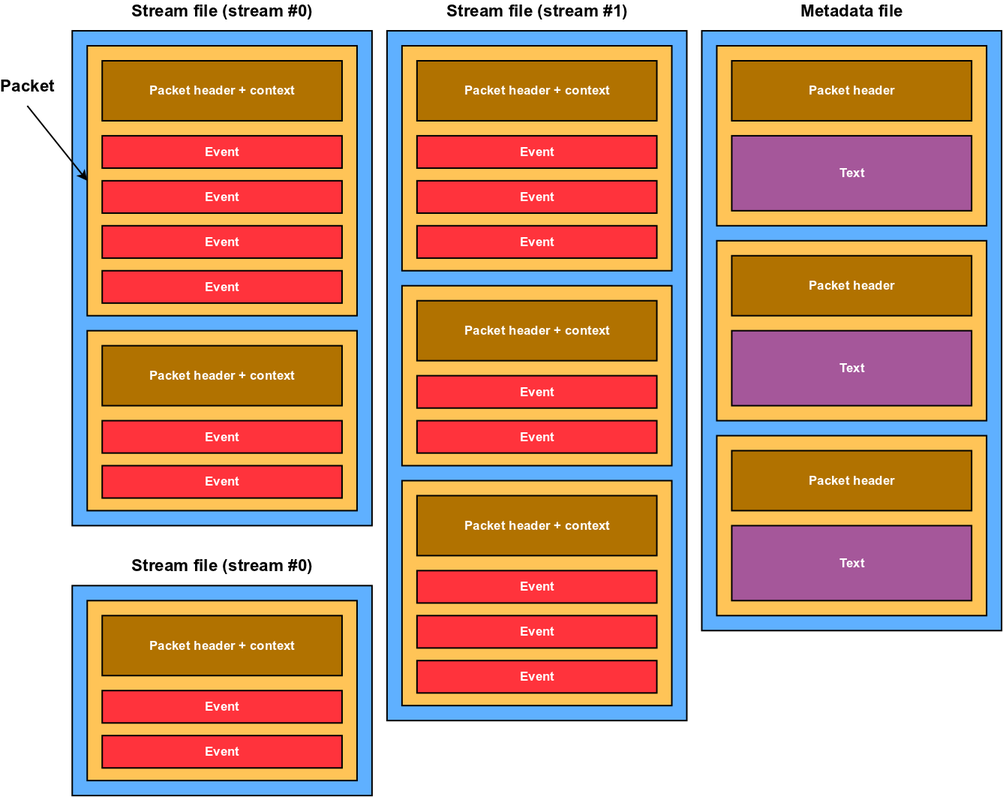

A trace is divided into streams, which may span over multiple stream files. A trace also includes a metadata file which is covered later.

There can be any number of streams, as long as they have different IDs. However, in most cases (at least at the time of writing this article), there is only one stream, which is divided into one file per CPU. Since different CPUs can generate different events at the same time, LTTng (the only tracer known to produce a CTF output) splits its only stream into multiple files. Please note: a single file cannot contain multiple streams.

In the image above, we see 3 stream files: 2 for stream with ID 0 and a single one for stream with ID 1.

A stream "contains" packets. This relation can be seen the other way around: packets contain a stream ID. A stream file contains nothing but packets (no bytes before, between or after packets).

Packets

A packet is the main container of events. Event data cannot reside outside packets. A packet may sometimes contain only one event, but that event is still inside a packet.

Every packet starts with a small packet header which contains fields such as its stream ID (which should always be the same for all packets within the same file) and often a magic number. An optional packet context immediately follows. It usually holds further information, such as the packet size and content size in bits, the time interval covered by its events and so on.
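As a minimal sketch of reading such a header, the Python code below assumes a hypothetical fixed layout (32-bit magic number, 16-byte UUID, 32-bit stream ID, little-endian, no padding). In a real trace the layout is whatever the metadata declares, so `HEADER` and `parse_packet_header` are illustrative names only. The magic value is the one that appears in the packet example later in this guide (3254525889, i.e. 0xC1FC1FC1).

```python
import struct
import uuid

CTF_MAGIC = 0xC1FC1FC1  # 3254525889, the "magic" value of a CTF packet header

# Assumed layout (hypothetical; the real one is declared in the metadata):
# uint32 magic, 16-byte UUID, uint32 stream_id, little-endian, no padding.
HEADER = struct.Struct("<I16sI")


def parse_packet_header(data: bytes) -> dict:
    """Decode the assumed packet header found at the start of `data`."""
    magic, raw_uuid, stream_id = HEADER.unpack_from(data, 0)
    if magic != CTF_MAGIC:
        raise ValueError("not a CTF packet: bad magic 0x%08X" % magic)
    return {
        "magic": magic,
        "uuid": uuid.UUID(bytes=raw_uuid),
        "stream_id": stream_id,
    }


# Build a synthetic header and decode it again.
sample = HEADER.pack(CTF_MAGIC, bytes(range(16)), 0)
header = parse_packet_header(sample)
```

Checking the magic number first is a cheap way to reject a file that is not made of CTF packets before trying to interpret anything else.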

Then come the events, one after the other. How do we know when we reach the end of the packet? We keep track of the current offset into the packet until it equals the content size defined in its context.
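The end-of-packet rule can be sketched as a simple loop. The code below is not the reader used by org.eclipse.linuxtools.ctf.core; it assumes a hypothetical `read_event` callback that decodes one event at a given bit offset and returns the event together with its size in bits.

```python
from typing import Callable, List, Tuple


def read_packet_events(
    first_event_offset: int,
    content_size: int,
    read_event: Callable[[int], Tuple[object, int]],
) -> List[object]:
    """Decode events until the offset reaches content_size (both in bits)."""
    events = []
    offset = first_event_offset
    while offset < content_size:
        event, size_bits = read_event(offset)
        if size_bits <= 0:
            raise ValueError("event decoder made no progress")
        events.append(event)
        offset += size_bits
    if offset != content_size:
        raise ValueError("last event overruns the packet content")
    return events


# Toy decoder (hypothetical): every event is 64 bits wide and is
# identified by the offset at which it starts.
def fixed_size_event(offset: int) -> Tuple[object, int]:
    return ("event@%d" % offset, 64)


# Header and context occupy the first 128 bits; three events follow.
events = read_packet_events(128, 128 + 3 * 64, fixed_size_event)
```

The final check makes a truncated or corrupted packet fail loudly instead of silently producing a partial last event.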

Events

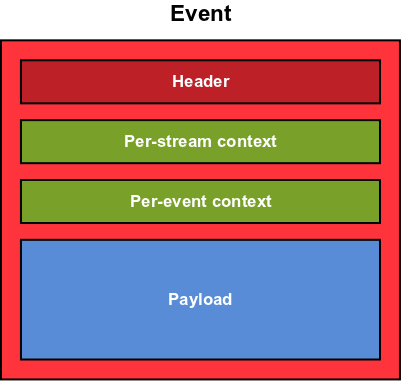

An event isn't just a bunch of payload bits. We have to know what type of event it is, and sometimes other things. Here's the structure of an event:

The event header contains the time stamp of the event and its ID. Knowing its ID, we know the payload structure.

Both contexts are optional. The per-stream context exists in all events of a stream if enabled. The per-event context exists in all events with a given ID (within a certain stream) if enabled. Thus, the per-stream context is enabled per stream and the per-event context is enabled per (stream, event type) pair.

Please note: there is no stream ID written anywhere in an event. This means that an event "outside" its packet is lost forever since we cannot know anything about it. This is not the case for a packet: since it has a stream ID field in its header, a packet is independent and could be cut and pasted elsewhere without losing its identity.

Metadata file

The metadata file (which must be named exactly metadata if stored on a filesystem) describes the whole trace structure using TSDL (Trace Stream Description Language). This means the CTF format is self-describing.

When "packetized" (as in the CTF structure image above), a metadata packet contains a fixed metadata packet header (defined in the official specification) and no context. The metadata packet does not contain events: its whole payload is a single text string. Concatenating the payloads of all the packets yields the final metadata text.

In its simpler version, the metadata file can be a plain text file containing only the metadata text. This file is still named metadata. It is valid and recognized by CTF readers. The way to differentiate the packetized from the plain text version is that the former starts with a magic number which has "non text bytes". In fact, it is the magic number field of the first packet's header. All the metadata packets have this required magic number.
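The distinction between the two versions can be sketched as a check on the first four bytes of the file. The magic value 0x75D11D57 used below is, to the best of our knowledge, the one given for the metadata packet header in the official specification; it should be verified there before being relied on. The function name is hypothetical.

```python
import struct

# Magic number of a packetized metadata header (assumed from the CTF spec).
METADATA_MAGIC = 0x75D11D57


def is_packetized_metadata(first_bytes: bytes) -> bool:
    """Return True if the data starts with the magic number in either byte order."""
    if len(first_bytes) < 4:
        return False
    little = struct.unpack_from("<I", first_bytes)[0]
    big = struct.unpack_from(">I", first_bytes)[0]
    return METADATA_MAGIC in (little, big)


packetized = is_packetized_metadata(struct.pack("<I", METADATA_MAGIC) + b"\x00" * 8)
plain_text = is_packetized_metadata(b"/* CTF 1.8 */\ntrace { };")
```

Both byte orders are tested because the trace may have been produced on a machine whose endianness differs from the reader's.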

CTF types

The CTF types are data types that may be specified in the metadata and written as binary data into various places of stream files. In fact, anything written in the stream files is described in the metadata and thus is a CTF type.

Valid types are the following:

- simple types
  - integer number (any length)
  - floating point number (any length for the mantissa and exponent parts)
  - string (many character sets available)
  - enumeration (mapping of string labels to ranges of integer numbers)
- compound types
  - structure (collection of key/value entries, where the key is always a string)
  - array (length fixed in the metadata)
  - sequence (dynamic length using a linked integer)
  - variant (placeholder for some other possible types according to the dynamic value of a linked enumeration)
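The difference between an array and a sequence can be illustrated with a short sketch. It makes two simplifying assumptions that real CTF does not impose: elements are byte-aligned little-endian 32-bit unsigned integers, and the linked length integer sits immediately before the elements. The function names are hypothetical.

```python
import struct
from typing import List, Tuple


def read_array(data: bytes, offset: int, length: int) -> Tuple[List[int], int]:
    """Read `length` elements; for an array the length comes from the metadata."""
    values = list(struct.unpack_from("<%dI" % length, data, offset))
    return values, offset + 4 * length


def read_sequence(data: bytes, offset: int) -> Tuple[List[int], int]:
    """Read the linked length integer first, then that many elements."""
    (length,) = struct.unpack_from("<I", data, offset)
    return read_array(data, offset + 4, length)


# A sequence of three elements: the length (3) followed by 10, 20, 30.
payload = struct.pack("<I3I", 3, 10, 20, 30)
values, end = read_sequence(payload, 0)
```

In both cases the reader knows the element count before reading the first element; only the place where that count comes from differs.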

JSON CTF

As a means to keep test traces in a portable and versionable format, a specific JSON schema was developed in summer 2012. Its purpose is to make it possible to convert a binary CTF trace A to JSON CTF and then convert that JSON CTF back to a binary CTF trace B, with A and B being binary identical (except for padding bits and the metadata file).

About JSON

JSON is a lightweight text format used to define complex objects, with only a few data types that are common to most programming languages: objects (aka maps, hashes, dictionaries, property lists, key/value pairs), arrays (aka lists), Unicode strings, integer and floating point numbers (no limitation on precision), booleans and null. Note that the JSON specification itself treats objects as unordered collections; the schema described in this guide relies on readers and writers preserving the order of keys.

Here's a short example of a JSON object showing all the language features:

    {
        "firstName": "John",
        "lastName": "Smith",
        "age": 25,
        "male": true,
        "carType": null,
        "kids": [
            "Moby Dick",
            "Mireille Tremblay",
            "John Smith II"
        ],
        "infos": {
            "address": {
                "streetAddress": "21 2nd Street",
                "city": "New York",
                "state": "NY",
                "postalCode": "10021"
            },
            "phoneNumber": [
                { "type": "home", "number": "212 555-1234" },
                { "type": "fax", "number": "646 555-4567" }
            ]
        },
        "balance": 3482.15,
        "lovesXML": false
    }

A JSON object always starts with {. The root object is unnamed. All keys are strings (must be quoted). Numbers may be negative. You may basically have any structure: array of arrays of objects containing objects and arrays.
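As an illustration only, here is how a standard JSON parser (Python's `json` module, chosen for brevity rather than because the CTF component uses it) maps a few of these values to native types. It also shows that this particular parser keeps keys in document order.

```python
import json

text = (
    '{"firstName": "John", "age": 25, "male": true, '
    '"carType": null, "kids": ["Moby Dick"], "balance": 3482.15}'
)

person = json.loads(text)

# Name of the native type chosen for each JSON value.
type_names = {key: type(value).__name__ for key, value in person.items()}

# Keys come back in the order in which they appear in the text.
key_order = list(person)
```

Key order preservation is a property of the parser, not of JSON itself, so a tool reading JSON CTF has to pick a parser that guarantees it.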

Even big JSON files are easy to read, but a tree view can always be used for even more clarity.

Why not use XML, then? From the official JSON website:

- Simplicity: JSON is way simpler than XML and is easier to read for humans, too. Also: JSON has no "attributes" belonging to nodes.

- Extensibility: JSON is not extensible because it does not need to be. JSON is not a document markup language, so it is not necessary to define new tags or attributes to represent data in it.

- Interoperability: JSON has the same interoperability potential as XML.

- Openness: JSON is at least as open as XML, perhaps more so because it is not in the center of corporate/political standardization struggles.

In other words: why bother closing each XML tag by repeating the name that opened it when JSON only needs the name once? Compare both:

    <cart user="john">
        <item>asparagus</item>
        <item>pork</item>
        <item>bananas</item>
    </cart>

    {
        "user": "john",
        "items": [ "asparagus", "pork", "bananas" ]
    }

Schema

The "dictionary" approach of JSON objects makes it very convenient to store CTF structures since they are exactly that: ordered key/value pairs where the key is always a string. Arrays and sequences can be represented by JSON arrays (the dynamic length of CTF sequences needs no special handling in JSON since the closing ] indicates the end of the array, whereas a CTF reader must know the number of elements before it starts reading an array or sequence). CTF integers and enumerations are represented as JSON integer numbers; CTF floating point numbers are represented as JSON integer numbers for the mantissa and exponent parts (to keep precision that would be lost by using JSON floating point numbers).

To fully understand the developed JSON schema, we use the same subsection names as in Binary CTF anatomy.

Stream files

All streams of a JSON CTF trace fit into the same file, which by convention has a .json extension. So: a binary CTF trace is a directory whereas a JSON CTF trace is a single file. There is no such thing as a "stream file" in JSON CTF. The JSON file looks like this:

    {
        "metadata": "we will see this later",
        "packets": [ "next subsection" ]
    }

The packets node is an array of packet nodes which are ordered by first time stamp (this is found in their context).

Binary CTF stream files can still be rebuilt from a JSON CTF trace since a packet header contains its stream ID. It's just a matter of reading the packet objects in order, converting them to binary CTF, and appending them to the appropriate file according to the stream ID and the CPU ID.
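The grouping step of this rebuild can be sketched as follows. The trace below is a made-up, heavily trimmed example, and defaulting to CPU 0 when a packet has no `cpu_id` is an assumption of this sketch, not something the schema states. The conversion of each packet to binary CTF is left out.

```python
import json
from collections import defaultdict

trace_text = """
{
  "metadata": "external:somefile.tsdl",
  "packets": [
    {"header": {"stream_id": 0}, "context": {"timestamp_begin": 10, "cpu_id": 0}, "events": []},
    {"header": {"stream_id": 0}, "context": {"timestamp_begin": 20, "cpu_id": 1}, "events": []},
    {"header": {"stream_id": 0}, "context": {"timestamp_begin": 30, "cpu_id": 0}, "events": []}
  ]
}
"""


def group_packets(trace: dict) -> dict:
    """Map each (stream ID, CPU ID) pair to its packets, in trace order."""
    files = defaultdict(list)
    for packet in trace["packets"]:
        stream_id = packet["header"]["stream_id"]
        # Assumption: a packet without a cpu_id is attributed to CPU 0.
        cpu_id = packet.get("context", {}).get("cpu_id", 0)
        files[(stream_id, cpu_id)].append(packet)
    return dict(files)


files = group_packets(json.loads(trace_text))
```

Because the packets array is already ordered by first time stamp, each group comes out in the order in which its packets must be written to the stream file.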

Packets

Packet nodes (which are elements of the aforementioned packets JSON array) look like this:

    {
        "header": { },
        "context": { },
        "events": [ "event nodes here" ]
    }

Of course, the header and context fields contain the appropriate structures.

The events node is an array of event nodes.

Here is a real world example:

    {
        "header": {
            "magic": 3254525889,
            "uuid": [30, 20, 218, 86, 124, 245, 157, 64, 183, 255, 186, 197, 61, 123, 11, 37],
            "stream_id": 0
        },
        "context": {
            "timestamp_begin": 1735904034660715,
            "timestamp_end": 1735915544006801,
            "events_discarded": 0,
            "content_size": 2096936,
            "packet_size": 2097152,
            "cpu_id": 0
        },
        "events": [ ]
    }

In this last example, event nodes are omitted to save space. The key names of the header and context nodes are exactly the ones declared in the metadata text.

The context node is optional and may be absent if there's no packet context.
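As a small worked example, the following sketch loads the packet node shown above and derives a few quantities from it. The variable names are ours; the field names are those of the example. Sizes are in bits, as stated in the Packets section of Binary CTF anatomy.

```python
import json

packet = json.loads("""
{
  "header": {
    "magic": 3254525889,
    "uuid": [30, 20, 218, 86, 124, 245, 157, 64, 183, 255, 186, 197, 61, 123, 11, 37],
    "stream_id": 0
  },
  "context": {
    "timestamp_begin": 1735904034660715,
    "timestamp_end": 1735915544006801,
    "events_discarded": 0,
    "content_size": 2096936,
    "packet_size": 2097152,
    "cpu_id": 0
  },
  "events": []
}
""")

# The context is optional, so fall back to an empty object.
context = packet.get("context", {})

# Bits at the end of the packet that hold no event data.
padding_bits = context["packet_size"] - context["content_size"]

# Packet size in bytes (the context stores it in bits).
packet_bytes = context["packet_size"] // 8

# Time interval covered by the packet, in the unit of the trace clock.
duration = context["timestamp_end"] - context["timestamp_begin"]

magic_hex = "0x%08X" % packet["header"]["magic"]
```

The padding is the part of a packet that a round trip through JSON CTF is not expected to reproduce bit for bit.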

Events

Event nodes (which are elements of the aforementioned events JSON array) have a structure that's easy to guess: header, optional per-stream context, optional per-event context and payload. Nodes are named like this:

    {
        "header": { },
        "streamContext": { },
        "eventContext": { },
        "payload": { }
    }

Here is a real world example:

    {
        "header": {
            "id": 65535,
            "v": {
                "id": 34,
                "timestamp": 1735914866016283
            }
        },
        "payload": {
            "_comm": "lttng-consumerd",
            "_tid": 31536,
            "_delay": 0
        }
    }

No context is used in this particular example. Again, the key names of the header, streamContext, eventContext and payload nodes are exactly the ones declared in the metadata text.
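Reading an event node mostly comes down to handling the optional parts. The sketch below uses the example above and assumes, as that example suggests, that when the header carries a nested `v` node the effective event ID and time stamp are the ones inside it; this interpretation depends on the trace's metadata and is not guaranteed for every trace. `event_summary` is a hypothetical helper.

```python
import json

event = json.loads("""
{
  "header": {"id": 65535, "v": {"id": 34, "timestamp": 1735914866016283}},
  "payload": {"_comm": "lttng-consumerd", "_tid": 31536, "_delay": 0}
}
""")


def event_summary(event: dict) -> dict:
    """Collect the effective ID, time stamp and optional parts of an event node."""
    header = event["header"]
    # Assumption: a nested "v" node, when present, overrides the outer fields.
    selected = header.get("v", header)
    return {
        "id": selected.get("id", header.get("id")),
        "timestamp": selected.get("timestamp"),
        "has_stream_context": "streamContext" in event,
        "has_event_context": "eventContext" in event,
        "payload_keys": list(event.get("payload", {})),
    }


summary = event_summary(event)
```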

Metadata file

Back to the JSON CTF root node. It contains two keys: metadata and packets. We already covered packets in section Packets. The metadata node is a single JSON string. It's either external:somefile.tsdl, in which case file somefile.tsdl must exist in the same directory and contain the whole metadata plain text, or the whole metadata text in a single string. The latter means all new lines and tabs must be escaped with \n and \t, for example.

Since the metadata text of a given trace may be huge (often several hundred kilobytes), it might be a good idea to make it external for human readability. However, if portability is the primary concern, a single self-contained JSON text file is still possible by embedding the whole metadata text in the string.

There can never be a collision between the string external: and the beginning of a real TSDL metadata text since external is not a TSDL keyword.
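Resolving the metadata node can be sketched in a few lines. The function name and the UTF-8 encoding are assumptions of this sketch; the `external:` prefix and the rule that the file lives in the same directory as the JSON file come from the description above.

```python
import os
import tempfile

EXTERNAL_PREFIX = "external:"


def load_metadata_text(metadata_value: str, trace_dir: str) -> str:
    """Return the TSDL text, reading it from a sibling file if it is external."""
    if metadata_value.startswith(EXTERNAL_PREFIX):
        file_name = metadata_value[len(EXTERNAL_PREFIX):]
        path = os.path.join(trace_dir, file_name)
        with open(path, encoding="utf-8") as f:
            return f.read()
    # Otherwise the JSON string already holds the whole metadata text.
    return metadata_value


# Demonstration with a throwaway directory and a tiny metadata text.
with tempfile.TemporaryDirectory() as trace_dir:
    with open(os.path.join(trace_dir, "somefile.tsdl"), "w", encoding="utf-8") as f:
        f.write("trace { };\n")
    external_text = load_metadata_text("external:somefile.tsdl", trace_dir)
    inline_text = load_metadata_text("trace { };\n", trace_dir)
```

Both forms yield the same text, so the rest of a reader does not need to know which one was used.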

org.eclipse.linuxtools.ctf.core

This section describes the Java software architecture of org.eclipse.linuxtools.ctf.core. This package and all its subpackages contain code to read/write both binary CTF and JSON CTF formats, translate from one to another and support other input/output languages.